2023. 3. 27. 23:11ㆍ🧑🏻🏫 Ideas/(Advanced) Time-Series

1. Introduction

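Time series analysis, and forecasting in particular, is used across many domains, including energy consumption, traffic, economic indicators, weather, and disease.

In many real-world applications the forecasting horizon needs to be pushed further into the future, which inevitably means dealing with long-term time series.

In this setting the "Transformer" addressed the "long-range dependence" problem through its self-attention mechanism, and many Transformer-based models have made substantial progress across a range of studies.

Despite these results, long-term forecasting remains a very hard problem.

The paper gives two reasons.

1. The dependencies of a long-term series are hidden behind very "complex variations", so the temporal dependency is hard to capture effectively.

2. Self-attention in the vanilla Transformer has quadratic complexity in the sequence length, which severely limits its use on long series.

The second problem has been eased considerably by work on reducing the computational cost and by the resulting model variants.

However, most of these variants concentrate only on making attention more efficient by imposing a "sparse" bias.

(The paper describes the approach these models take as point-wise representation aggregation.)

Their limitation is that they gain computational efficiency at the cost of information: the sparse point-wise connections discard part of the information in the series.

The paper therefore looks for a model that captures the "temporal dependency" effectively while also achieving "computational efficiency".

The proposed model, "Autoformer", first adopts the idea of "decomposition", a classical method in time series analysis, in order to make full use of the information in the series.

Decomposition here is not used only as a preprocessing step; it is built deeply into the architecture so that its effect carries through to the final prediction.

In addition, in place of point-wise self-attention the model uses a "series-wise" method that works with "sub-series" sharing a "similar period". This is the "Auto-Correlation" mechanism, which finds sub-series with similar periods through their auto-correlation and aggregates them.

The authors argue that this architecture and the series-wise mechanism are better in terms of both complexity and information utilization, and they back this up with experiments, including state-of-the-art ("SOTA") accuracy.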

*Transformer reference

https://seollane22.tistory.com/20?category=1012181

Attention Is All You Need(2017) #Transformer

2. Related Work

2-1) Models for Time Series Forecasting

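This section first gives a brief overview of the models that have been applied to time series forecasting.

1. ARIMA: a classical statistical model that makes a non-stationary series stationary by "differencing" and then models it.

2. RNNs: classical deep learning models that take inputs one step at a time and carry information from earlier steps forward to later ones.

3. DeepAR: combines RNNs with autoregressive methods to model the probability distribution of the future series.

4. LSTNet: combines CNNs with recurrent-skip connections.

5. Attention-based RNNs: introduce temporal attention on top of an RNN to detect long-range dependencies.

6. TCN: models temporal causality with causal convolutions.

7. Transformer-based models:

The self-attention mechanism of the Transformer has shown strong performance on sequential tasks.

For long-term forecasting, however, its complexity is quadratic in the input length (the length of the series). This inefficiency in memory and time has been a major concern for researchers working on time series Transformers, and many studies have proposed models that improve on it.

- LogTrans proposes LogSparse attention, which attends to time steps selected at exponentially increasing intervals.

- Reformer reduces the computational complexity with locality-sensitive hashing (LSH) attention.

- Informer proposes ProbSparse attention, which scores the importance of time steps and attends only to the most important ones.

Note that all of these build on the vanilla Transformer (Informer excepted) and are point-wise techniques. As explained above, such techniques cannot untangle the complex variations in a series, so they struggle to capture dependencies effectively.

Autoformer therefore applies a series-wise technique based on shared periodicity, not a point-wise one, to capture the dependencies of long-term series effectively. (This is the Auto-Correlation mechanism.)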

2-2) Decomposition of Time Series

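Decomposition, a standard method in time series analysis, breaks the variation of a series into several components.

These components are broadly trend, seasonal, cyclical, and random variation. Because they show how the series came to be formed, they can be of great help in forecasting.

In the past, however, decomposition was used only in preprocessing. This limited use overlooks the "hierarchical interaction" between the components when forecasting far into the future, which makes long-term forecasting harder.

To exploit decomposition fully, Autoformer places several decomposition blocks inside the model and is designed to decompose the hidden series progressively.

As a result, decomposition is used effectively throughout the whole forecasting process and not only at the preprocessing stage.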

3. Autoformer

From this section on, the paper explains the mechanisms and structure of Autoformer one by one.

Time series forecasting can be defined as predicting the next O steps of a series given the past I steps. (input-I-predict-O)
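As a rough illustration of the input-I-predict-O setup, the sketch below cuts a series into (past, future) training pairs. The function name `make_windows` and its parameters are hypothetical and not taken from the paper.

```python
def make_windows(series, input_len, pred_len):
    """Return (past, future) pairs: input_len observed steps, then pred_len targets.

    Hypothetical helper for illustration; input_len plays the role of I
    and pred_len the role of O.
    """
    last_start = len(series) - input_len - pred_len
    return [
        (series[s:s + input_len], series[s + input_len:s + input_len + pred_len])
        for s in range(last_start + 1)
    ]


pairs = make_windows(list(range(10)), input_len=4, pred_len=3)
for past, future in pairs:
    print(past, "->", future)
```

Long-term forecasting corresponds to making `pred_len` large relative to what earlier models were evaluated on.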

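Long-term forecasting refers to the case where O is large, and, as noted earlier, it comes with two main problems.

1. Complex temporal patterns are hard to handle.

2. Computation is inefficient, and the information in the series is not fully used.

Autoformer addresses these with the following distinguishing features.

What sets Autoformer apart

1. It designs an architecture in which decomposition is placed throughout the forecasting process, not only in preprocessing.

2. In place of a point-wise technique that aggregates sparse time steps, it applies a series-wise technique that uses auto-correlation to aggregate time steps sharing the same period.

(A change of mechanism: Attention -> Auto-Correlation)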

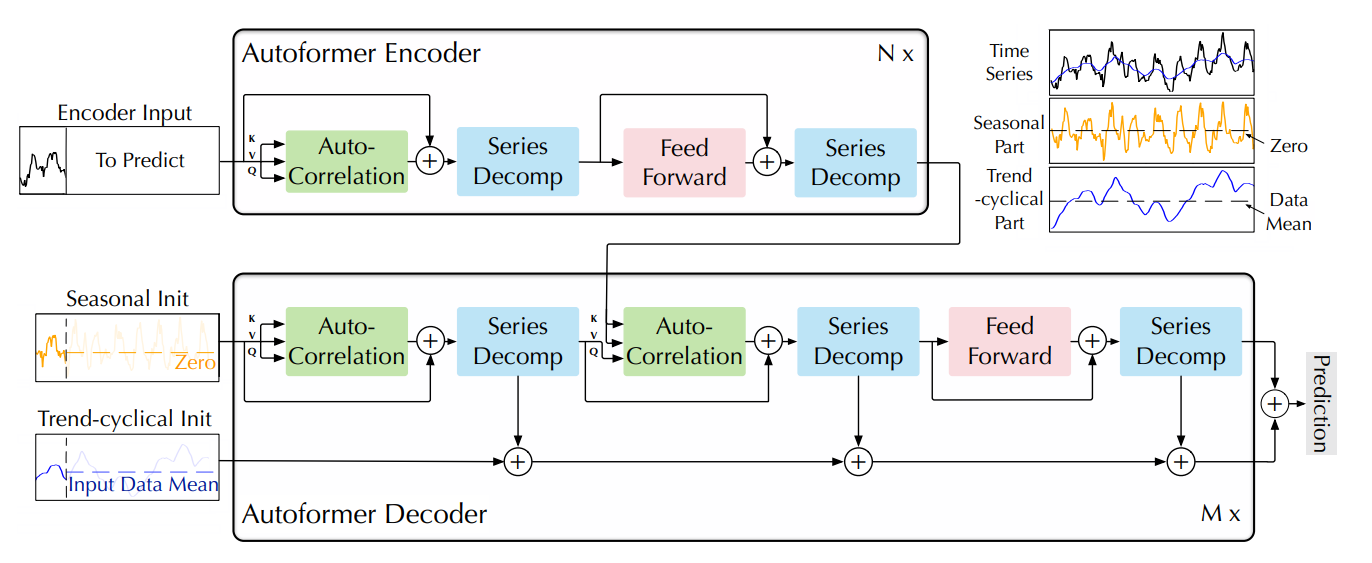

3-1) Decomposition Architecture

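Decomposition separates a series into a trend-cyclical part and a seasonal part. The former represents the long-term trend of the series; the latter represents seasonal variation that repeats with a relatively short, fixed period (typically one year).

Besides the preprocessing that builds the inputs, Autoformer contains series decomposition blocks inside the model.

These are the light-blue blocks in the architecture figure below; their role is to decompose the hidden series progressively inside the model.

By decomposing the intermediate results inside the encoder and decoder, they end up extracting "the long-term stationary trend" in finer detail. (The details are explained below.)

To look at the decomposition concretely, we first need to look at the inputs to the model.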

Model inputs

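The inputs to the Autoformer encoder and decoder are built as shown in the figure.

* seq_len (input series): the total length of the input (I in the paper)

* label_len (start token series): the length of the label portion of seq_len (half of seq_len)

* pred_len (padding): the length of the horizon to predict (O in the paper)

The encoder takes the whole seq_len series as input. That input series is split in half, and the more recent half is used as the label.

The decoder input is formed by applying Series Decompose to the label_len segment cut out above. The two things to note here are the Series Decompose step and the padding.

The decoder receives the trend and seasonal parts obtained by decomposing the label_len segment. Since label_len covers only half of seq_len and the decoder must also produce the pred_len future steps, both parts are extended with pred_len padded placeholders.

The trend part is padded with the mean of the encoder input, and the seasonal part, which is combined with the encoder's information, is padded with zeros for prediction.

(See the figure above.)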

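The decomposition itself works as follows.

$$X_{t} = \mathrm{AvgPool}(\mathrm{Padding}(X))$$

$$X_{s} = X - X_{t}$$

$$X_{t}: \text{trend-cyclical part}$$ $$X_{s}: \text{seasonal part}$$

These equations describe the decomposition at the heart of the model, so they are worth remembering.

The model uses AvgPool, a moving-average (MA) technique, to smooth out periodic fluctuations and highlight the long-term trend, and it applies padding so that the length of the series does not change.

(From here on, the whole procedure is abbreviated as the following function.) $$\mathrm{SeriesDecomposition}(X)$$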
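A minimal sketch of the two equations above on a plain Python list. The kernel size of 25 and the choice to pad by repeating the first and last values are assumptions of this sketch, and the name `series_decomp` is hypothetical.

```python
def series_decomp(x, kernel_size=25):
    """Split a 1-D series into (seasonal, trend) with a moving average.

    Assumptions of this sketch: kernel_size is odd, and Padding(X) repeats
    the first and last values so the output keeps the input length.
    """
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size is assumed to be odd")
    half = (kernel_size - 1) // 2
    # Padding(X): extend both ends so the pooled series has len(x) points.
    padded = [x[0]] * half + list(x) + [x[-1]] * half
    # X_t = AvgPool(Padding(X)) with stride 1.
    trend = [sum(padded[i:i + kernel_size]) / kernel_size for i in range(len(x))]
    # X_s = X - X_t
    seasonal = [xi - ti for xi, ti in zip(x, trend)]
    return seasonal, trend


# A rising line with a small zig-zag on top of it.
series = [float(i) + (1.0 if i % 2 else -1.0) for i in range(20)]
seasonal, trend = series_decomp(series, kernel_size=5)
```

By construction `seasonal[i] + trend[i]` reproduces `series[i]`, so the block splits the input into two parts without losing information.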

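Using this procedure, Autoformer builds the decoder inputs as follows.

$$X_{en_s}, X_{en_t} = \mathrm{SeriesDecomposition}(X_{en\,\frac{I}{2}:I})$$

$$X_{de_s} = \mathrm{Concat}(X_{en_s}, X_{0})$$

$$X_{de_t} = \mathrm{Concat}(X_{en_t}, X_{mean})$$

Here $$X_{0}$$ is a placeholder of length O filled with zeros, and $$X_{mean}$$ is a placeholder of length O filled with the mean of the encoder input.

With the explanation and equations above in mind, the architecture figure makes the decomposition and the overall flow of the model much easier to follow.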
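The decoder-input construction can be sketched for a single variable as below. The helper names, the kernel size, and the edge-replicating padding are assumptions made for illustration only.

```python
def moving_average(x, kernel_size):
    """Length-preserving moving average (edge values repeated as padding)."""
    half = (kernel_size - 1) // 2
    padded = [x[0]] * half + list(x) + [x[-1]] * half
    return [sum(padded[i:i + kernel_size]) / kernel_size for i in range(len(x))]


def build_decoder_inputs(x_en, pred_len, kernel_size=5):
    """Return (x_des, x_det) for a 1-D encoder input x_en of length I."""
    label = x_en[len(x_en) // 2:]                      # latter half, X_en[I/2 : I]
    trend = moving_average(label, kernel_size)         # X_en_t
    seasonal = [v - t for v, t in zip(label, trend)]   # X_en_s
    mean = sum(x_en) / len(x_en)                       # mean of the encoder input
    x_des = seasonal + [0.0] * pred_len                # Concat(X_en_s, X_0)
    x_det = trend + [mean] * pred_len                  # Concat(X_en_t, X_mean)
    return x_des, x_det


x_en = [float(i % 4) for i in range(16)]               # I = 16
x_des, x_det = build_decoder_inputs(x_en, pred_len=6)  # O = 6
```

Both outputs have length I/2 + O: the decomposed label segment followed by O placeholder steps.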

Encoder

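The encoder takes the whole input series and passes it through an Auto-Correlation layer, which aggregates sub-series with similar periods. (The auto-correlation is computed, scores are obtained with a softmax, and the sub-series are combined as a weighted sum.)

Series Decomposition follows. In the encoder, Series Decomposition keeps only the seasonal part and discards the remaining trend(+cyclical) part.

Seasonal variation repeats with a relatively short period (typically one year); isolating it in the encoder is meant to support the extraction of "the long-term stationary trend" mentioned above.

The seasonal part left after decomposition is then passed through the feed-forward layer and decomposed once more, giving the final output of one encoder layer. (N encoder layers are stacked, where N is a hyperparameter.)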
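For reference, the encoder flow described above can be written for layer l as follows. This restates the paper's equations as I read them; the residual additions (the "+" terms) come from the paper, not from the description above, and the symbols $$S_{en}^{l,i}$$ are introduced here only for illustration.

$$S_{en}^{l,1}, \_ = \mathrm{SeriesDecomposition}(\mathrm{AutoCorrelation}(X_{en}^{l-1}) + X_{en}^{l-1})$$

$$S_{en}^{l,2}, \_ = \mathrm{SeriesDecomposition}(\mathrm{FeedForward}(S_{en}^{l,1}) + S_{en}^{l,1})$$

$$X_{en}^{l} = S_{en}^{l,2}$$

The underscore marks the trend part that the encoder discards.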

Decoder

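As explained above, the decoder takes the label_len segment, half of the input series, and first applies Series Decomposition to it.

The seasonal part is then passed through an Auto-Correlation layer, as in the encoder. Unlike the encoder, however, the decoder does not discard the trend(+cyclical) part: the trend(+cyclical) components separated at each Series Decomposition block are accumulated by addition.

Next, as with encoder-decoder attention in other Transformer models, the encoder's final output (seasonal) and the decoder's intermediate output (seasonal) are passed together through an Auto-Correlation layer that maps one onto the other.

The result is then decomposed, passed through the feed-forward layer, and decomposed once more. Finally, the trend(+cyclical) components accumulated along the way are added in, giving the final output of one decoder layer. (M decoder layers are stacked, where M is a hyperparameter.)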
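In the same spirit, the decoder flow for layer l can be written as follows. Again this is the paper's formulation as I read it; the residual additions and the projection weights $$W_{l,i}$$ come from the paper, and the symbol names are chosen here for illustration.

$$S_{de}^{l,1}, T_{de}^{l,1} = \mathrm{SeriesDecomposition}(\mathrm{AutoCorrelation}(X_{de}^{l-1}) + X_{de}^{l-1})$$

$$S_{de}^{l,2}, T_{de}^{l,2} = \mathrm{SeriesDecomposition}(\mathrm{AutoCorrelation}(S_{de}^{l,1}, X_{en}^{N}) + S_{de}^{l,1})$$

$$S_{de}^{l,3}, T_{de}^{l,3} = \mathrm{SeriesDecomposition}(\mathrm{FeedForward}(S_{de}^{l,2}) + S_{de}^{l,2})$$

$$T_{de}^{l} = T_{de}^{l-1} + W_{l,1} T_{de}^{l,1} + W_{l,2} T_{de}^{l,2} + W_{l,3} T_{de}^{l,3}$$

The three decomposition steps per layer correspond to the three decomposition blocks of the decoder, and the last line is the running sum of the trend components.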

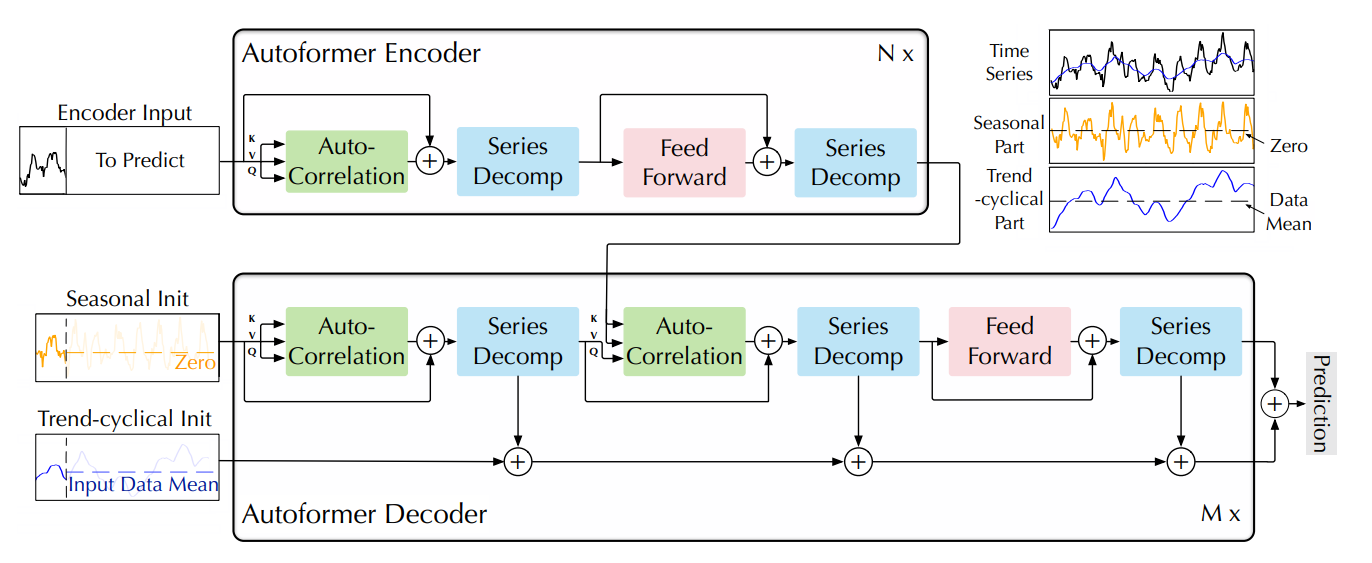

+ Positional Encoding

Like other Transformer-based models, this model needs a positional encoding to inject position information.

The vanilla Transformer only adds local position information, but effective long-term forecasting calls for more global time stamps.

These time stamps include hierarchical time stamps (week, month and year) and agnostic time stamps (holidays, events).

This is the same scheme proposed in "Informer": the input series is first projected to d_model dimensions to obtain u.

Local time stamps are then embedded as "fixed" positions using sin and cos functions, while global time stamps are injected through a "learnable embedding".
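The "fixed" local part can be sketched with the sin/cos formula of the vanilla Transformer. This covers only the fixed position codes; the learnable embeddings for global time stamps are not shown, and the function name is hypothetical.

```python
import math


def sinusoidal_position_embedding(seq_len, d_model):
    """Fixed (non-learned) sin/cos position codes, one d_model vector per step.

    Even dimensions use sin, odd dimensions use cos, as in the vanilla
    Transformer formula.
    """
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = sinusoidal_position_embedding(seq_len=4, d_model=6)
```

Each row would be added to the projected input u at the corresponding position.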

3-2) Auto-Correlation Mechanism

The other distinguishing feature of Autoformer is the "Auto-Correlation" mechanism.

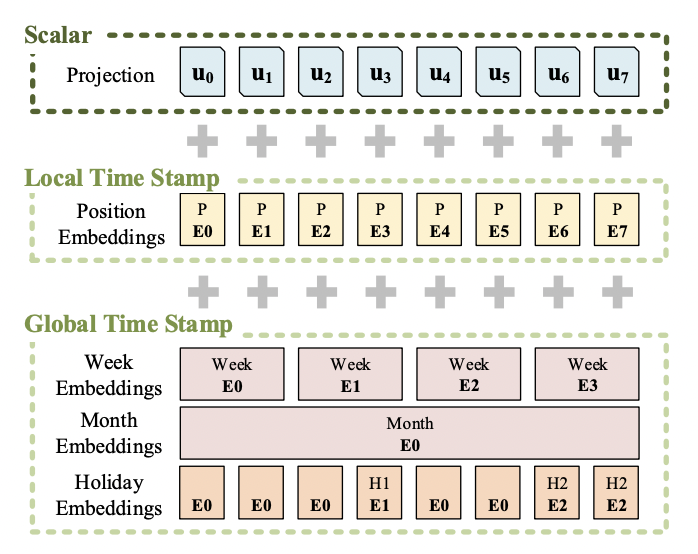

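Earlier Transformer-based models use the attention mechanism to measure similarity between past points, whereas Autoformer replaces attention with a mechanism based on auto-correlation.

In the paper's words, this is a "series-wise" method as opposed to the earlier "point-wise" ones.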

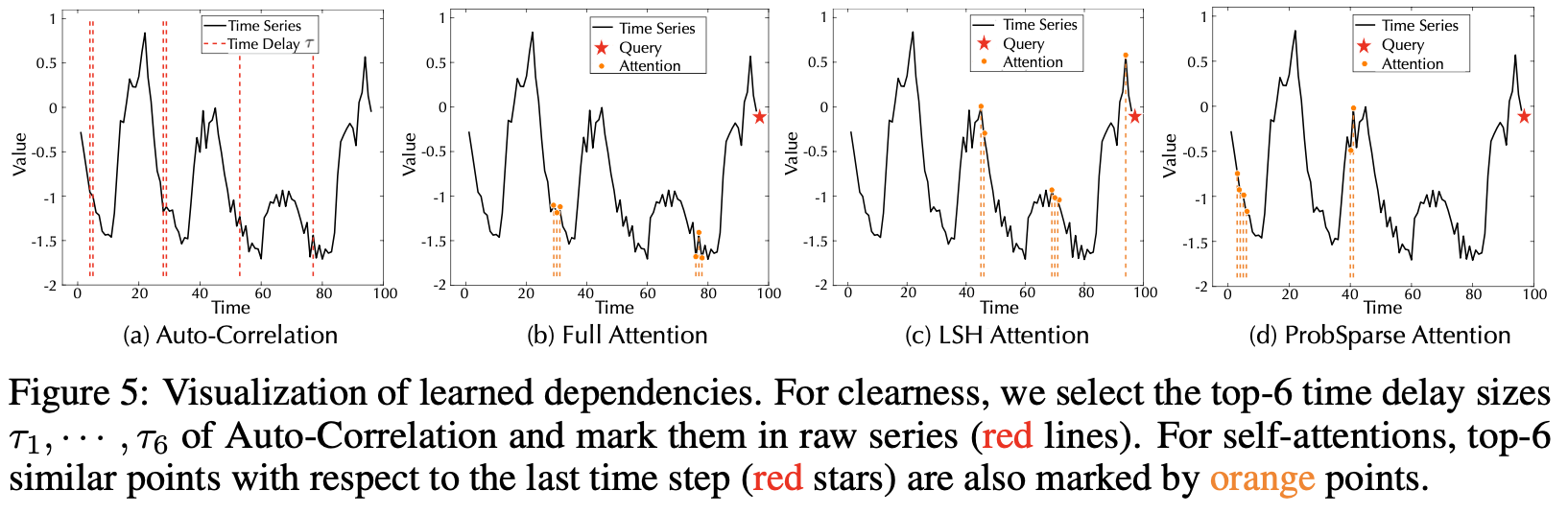

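As the figure above shows, unlike conventional attention (a) and the sparse variants (b) and (c), method (d) does not extract dependence relative to individual points; it extracts period-based dependence relative to series that follow a similar pattern.

The mechanism for judging which series are similar relies on a property of time series data: auto-correlation.

A time series evolves mainly according to its own history over time and not in response to changes in other variables, so it is correlated with its own past. Also, since a time series is by definition a flow of data over time, cutting it at isolated points loses the information about its period. The paper therefore argues that identifying and connecting the "sub-series" that are highly auto-correlated with a given time point makes it possible to use the information in the series more broadly. (expand information utilization)

The Auto-Correlation figure further above shows how the mechanism works. The left side shows the whole procedure, and the right side shows the Time Delay Aggregation block. The procedure can be summarized as follows.

1. Taking the encoder-decoder Auto-Correlation as the example, Q is obtained from the decoder and K, V from the encoder.

2. Q(de) and K(en) are transformed to the frequency domain with the FFT (Fast Fourier Transform), and the transform of Q is multiplied element-wise by the complex conjugate of the transform of K.

3. The result is transformed back to the time domain with the inverse FFT. (Steps 2 and 3 together yield the auto-correlation.)

4. Based on the auto-correlation, the top k most similar series (lags) are selected.

5. Finally, in the Time Delay Aggregation block, V(en), resized from length S to length L (S->L), is rolled by each of the k selected delays "τ" to produce k sub-series; these are multiplied by the weights obtained by passing the corresponding auto-correlation values through softmax, and aggregated.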

Period-based dependencies

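Sub-series at the same phase position among periods, that is, sub-series that are highly auto-correlated, follow similar sub-processes and therefore share the same period.

The purpose of the mechanism above is to extract dependencies based on that shared period.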

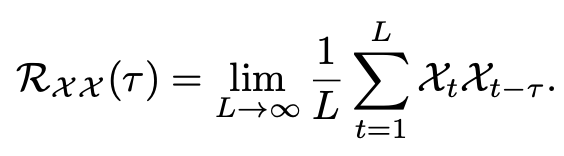

The auto-correlation used to extract period-based dependencies is defined as follows.
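For readers without the figure, this is the definition as I recall it from the paper. The calligraphic $$\mathcal{X}_{t}$$ denotes the value of the series at time t, not the trend part $$X_{t}$$ used in the decomposition equations.

$$R_{\mathcal{X}\mathcal{X}}(\tau) = \lim_{L \to \infty} \frac{1}{L} \sum_{t=1}^{L} \mathcal{X}_{t} \mathcal{X}_{t-\tau}$$

$$R_{\mathcal{X}\mathcal{X}}(\tau)$$ measures how similar the series is to a copy of itself delayed by τ, so a large value suggests that τ is a likely period length.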

Time Delay Aggregation

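A high auto-correlation at some delay can be read as the series, viewed as a whole, repeating with that period.

(In effect, computing the auto-correlation is a search for shared periods.)

To extract this dependence, the sub-series that share the same period (obtained by delaying by τ) are connected, based on the auto-correlation R derived from the formula above.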

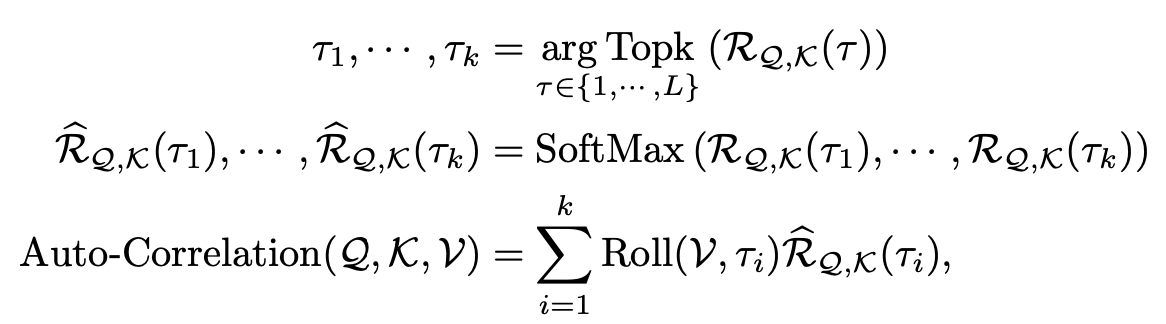

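To connect and aggregate sub-series with different time delays, the paper gives a formula whose symbols are defined as follows.

$$X$$: a time series of length $$L$$

$$\mathrm{Topk}(\cdot)$$: selects the k largest auto-correlations, with $$k = \lfloor c \times \log L \rfloor$$ (c is a hyperparameter)

$$R_{Q,K}$$: the auto-correlation between Q and K

$$\mathrm{Roll}(X, \tau)$$: the series X delayed by $$\tau$$. (Elements shifted past the first position are re-introduced at the last position; the result is the sub-series.)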
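With these symbols, the aggregation can be written as below. This is my transcription of the paper's formula; $$\hat{R}_{Q,K}$$ denotes the softmax-normalized auto-correlation.

$$\tau_{1}, \dots, \tau_{k} = \underset{\tau \in \{1, \dots, L\}}{\arg\mathrm{Topk}} \, R_{Q,K}(\tau)$$

$$\hat{R}_{Q,K}(\tau_{1}), \dots, \hat{R}_{Q,K}(\tau_{k}) = \mathrm{SoftMax}(R_{Q,K}(\tau_{1}), \dots, R_{Q,K}(\tau_{k}))$$

$$\mathrm{AutoCorrelation}(Q, K, V) = \sum_{i=1}^{k} \mathrm{Roll}(V, \tau_{i}) \, \hat{R}_{Q,K}(\tau_{i})$$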

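To summarize:

1. Compute the auto-correlation between Q and K and pick the k sub-series with the highest auto-correlation (that is, those sharing the same period).

2. Pass their auto-correlation values through softmax to turn the similarity between Q and K into weights.

3. Multiply the sub-series of V (obtained by delaying by τ) by these weights and aggregate them as a weighted sum.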
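The three steps can be sketched for a single 1-D channel as below. The function name is hypothetical, the auto-correlation scores are passed in precomputed, lag 0 is allowed as a candidate, and the roll direction follows the paper's description as I read it; all of these are assumptions of the sketch.

```python
import math


def time_delay_aggregation(corr, values, c=1.0):
    """Aggregate rolled copies of `values` weighted by softmaxed scores.

    corr[tau] is the auto-correlation score R_{Q,K}(tau) for delay tau, and
    values is V as a list of length L. Hypothetical helper for illustration.
    """
    length = len(values)
    k = max(1, int(c * math.log(length)))  # k = floor(c * log L), at least 1
    # Step 1: delays with the k largest auto-correlation scores.
    top_lags = sorted(range(length), key=lambda tau: corr[tau], reverse=True)[:k]
    # Step 2: softmax over the selected scores (shifted by the max for stability).
    peak = corr[top_lags[0]]
    exps = [math.exp(corr[tau] - peak) for tau in top_lags]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Step 3: weighted sum of the rolled copies of V.
    out = [0.0] * length
    for tau, w in zip(top_lags, weights):
        rolled = values[tau:] + values[:tau]  # Roll(V, tau): the head wraps to the end
        out = [o + w * v for o, v in zip(out, rolled)]
    return out


values = [float(i) for i in range(8)]
scores = [0.0] * 8
scores[2] = 5.0                      # pretend delay 2 is the dominant period
single = time_delay_aggregation(scores, values, c=0.5)   # k = 1
```

With `c=0.5` and L = 8 only one delay is kept, so the output is simply V rolled by 2; a larger `c` blends several rolled copies.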

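The result of this procedure is one head. The procedure is run once per head, with the number of heads set as a hyperparameter; the heads are then concatenated, multiplied by the block's weight matrix, and passed on. This is the same as in the vanilla Transformer, hyperparameters included.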

Efficient computation

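The O(L^2) complexity of the vanilla Transformer and the resulting inefficiency are limitations pointed out in many papers.

Making the Transformer more efficient is therefore an important direction pursued in many studies, including the various model variants.

Accordingly, along with forecasting performance, the paper also presents a way to reduce the complexity of Auto-Correlation.

For efficiency, Autoformer uses the "FFT (Fast Fourier Transform)".

The first function, S, is the squared magnitude of the Fourier transform, or more precisely the product of the transform with its complex conjugate (Conjugate in the figure). The second function is the inverse Fourier transform, which converts the series from the frequency domain back to the time domain. The mathematics inside the formula is involved, but the overall result is equivalent to the auto-correlation. The key point is that these transforms reduce the complexity to O(L log L).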
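A small self-contained check of this equivalence is sketched below. It uses a textbook radix-2 FFT written in pure Python, so the length must be a power of two, and compares the FFT route with the direct O(L^2) sum. The function names are hypothetical, the correlation is circular and unnormalized (no 1/L factor), and a real implementation would call a library FFT instead.

```python
import cmath


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unnormalized); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        twiddled = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddled
        out[k + n // 2] = even[k] - twiddled
    return out


def autocorr_fft(q, k):
    """R(tau) = sum_t q[t] * k[t - tau] (circular), computed in O(L log L)."""
    n = len(q)
    spectrum = [a * b.conjugate() for a, b in zip(fft(q), fft(k))]
    # Divide by n because the inverse pass above is unnormalized.
    return [v.real / n for v in fft(spectrum, inverse=True)]


def autocorr_direct(q, k):
    """The same quantity computed directly in O(L^2)."""
    n = len(q)
    return [sum(q[t] * k[(t - tau) % n] for t in range(n)) for tau in range(n)]


q = [1.0, 2.0, 0.0, -1.0, 3.0, 0.5, -2.0, 4.0]
k = [0.5, -1.0, 2.0, 1.0, 0.0, 3.0, -0.5, 1.5]
fast = autocorr_fft(q, k)
slow = autocorr_direct(q, k)
```

Both routes give the same values up to floating-point error; only the cost differs.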

*I plan to cover the FT (Fourier Transform) and the FFT (Fast Fourier Transform) in a separate post.

4. Experiments

4-1) Main Results

The final section, Experiments, reports several experiments that examine the performance and other advantages of Autoformer.

The experiments use six benchmark datasets, covering the domains of energy, traffic, economics, weather and disease.

(Training uses the L2 loss function and the ADAM optimizer with an initial learning rate of 10^-4 and a batch size of 32; the training process is early-stopped within 10 epochs.)

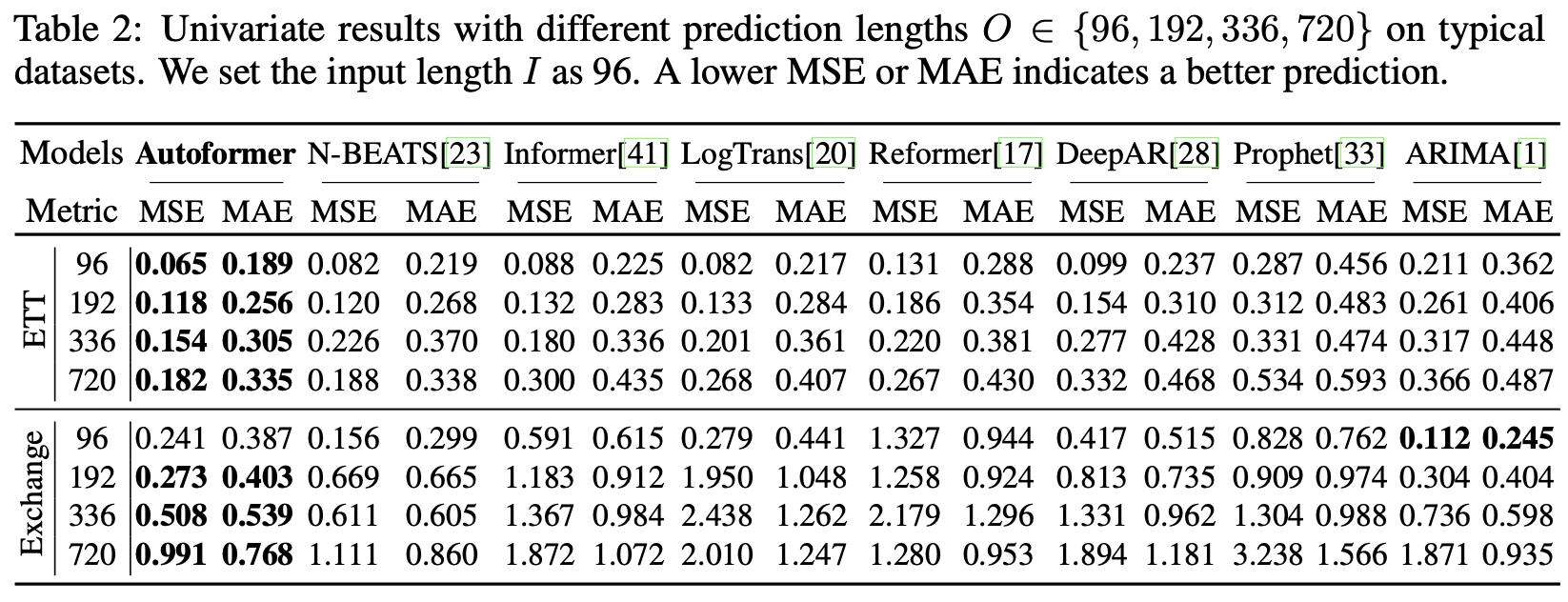

Multivariate results with different prediction lengths(96, 192, 336, 720)

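In the multivariate test, Autoformer achieved "SOTA" performance on every benchmark dataset, outperforming the other models. This amounts to a relative MSE reduction of about 38%, with improvements across all datasets and all output lengths (long-term robustness). A more striking result is that it also performed best on the exchange (exchange rate) dataset, which has no obvious periodicity.

This suggests that Autoformer can give good results on long inputs and on real-world data with complex variation such as multiple periods.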

Univariate results with different prediction lengths

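This experiment used ETT, which has pronounced periodicity, and Exchange, which does not. Autoformer also performed best in the univariate setting, where a single target variable is forecast.

On the Exchange data, however, ARIMA performed best at the shortest prediction length.

The authors note that this highlights ARIMA's strength in capturing short-term fluctuations of non-stationary economic data through differencing, and that it equally shows ARIMA's limitation: its performance drops sharply as the forecasting horizon grows.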

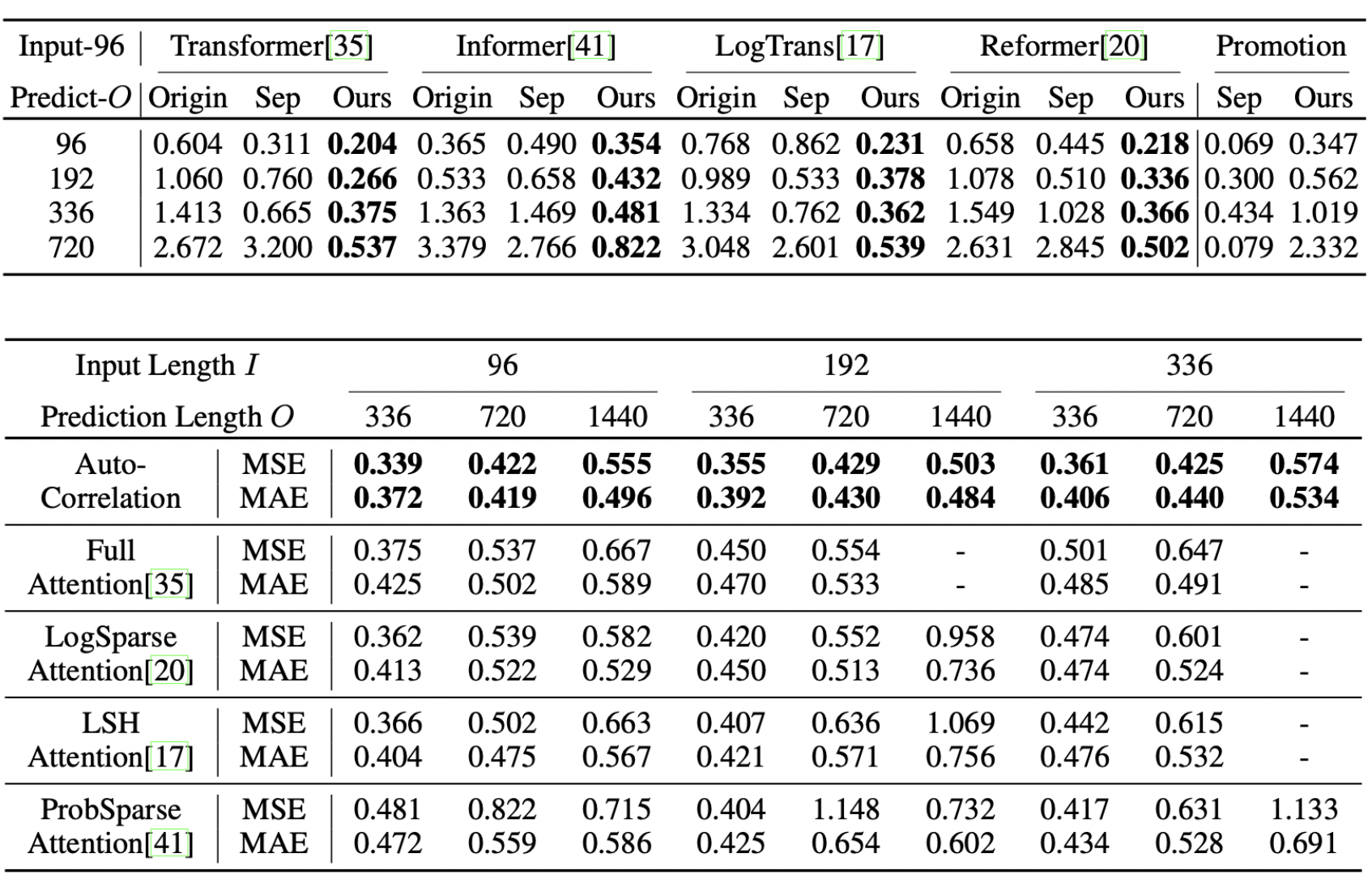

4-2) Ablation studies

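The ablation studies examine the effect of Autoformer's two distinguishing features, "Series Decomposition" and "Auto-Correlation".

The first experiment compares three settings: Origin (the baseline), Sep (the series is decomposed in advance and the two parts are predicted separately), and Ours (the Autoformer architecture). Ours gave the best results.

The second experiment applies different mechanisms inside Autoformer.

Here too the Auto-Correlation mechanism performed best, and it gave good results even with very long inputs and outputs without any "-" (out of memory) entries.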

4-3) Model Analysis

Finally, the authors run experiments on the properties of the Autoformer model and draw several observations from them.

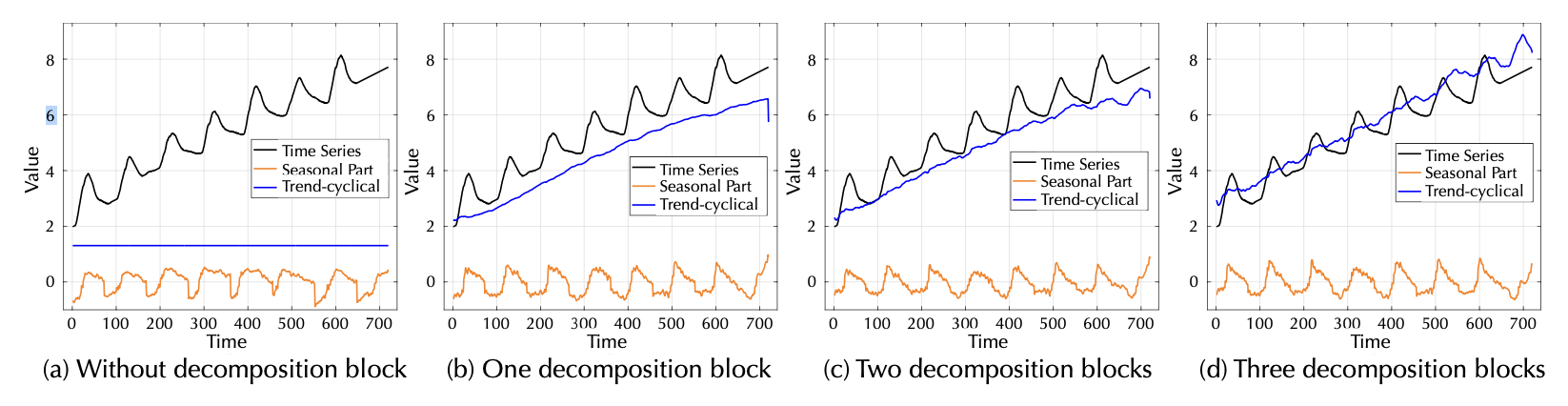

1. Time series decomposition

In (a), without any decomposition block, the model fails to capture the rising trend and the peaks of the seasonal variation.

As more blocks are added, it captures all of the variation better and better.

This indicates "why Autoformer needs three decomposition blocks".

2. Dependencies learning

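This experiment shows, for each mechanism, the series (a) or the points ((b), (c), (d)) identified as dependencies of the very last time step (which is in a declining phase).

In (a), unlike the attention mechanisms, Auto-Correlation finds dependencies more "broadly" and more "accurately" "within the overall flow" of the series.

This means that Auto-Correlation, the series-wise method, captures the overall flow better and is the more effective mechanism in terms of information utilization.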

3. Complex seasonality modeling

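As the last part of the model analysis, the authors visualize the density of the learned lags as histograms.

The histograms turn out to reflect the real-world seasonality of each dataset at its sampling interval.

For example, (a) shows a daily period at lag 24, the number of hours in a day, and a weekly period at lag 168 (24*7), the number of hours in a week.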
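Why lags 24 and 168 stand out can be illustrated with a synthetic hourly series. The series below is made up for this sketch (a daily sine plus a weaker weekly sine) and is not one of the paper's datasets; the function name is hypothetical.

```python
import math


def circular_autocorr(x):
    """Mean-centered circular auto-correlation for every lag, computed directly."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    return [
        sum(centered[t] * centered[(t - tau) % n] for t in range(n)) / n
        for tau in range(n)
    ]


hours = 24 * 14  # two weeks of hourly samples
series = [
    math.sin(2 * math.pi * t / 24) + 0.5 * math.sin(2 * math.pi * t / 168)
    for t in range(hours)
]
acf = circular_autocorr(series)
# Strongest non-zero lag within the first week.
best_lag = max(range(1, 169), key=lambda tau: acf[tau])
print(best_lag, acf[24] > acf[23], acf[24] > acf[25])
```

In this toy series the auto-correlation has a local peak at lag 24, where the daily pattern lines up, and its largest value at lag 168, where the daily and weekly patterns both line up.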

In other words, Autoformer does not just produce forecasts; it captures the periods of the variation along the way, and visualizing them yields predictions that humans can interpret.

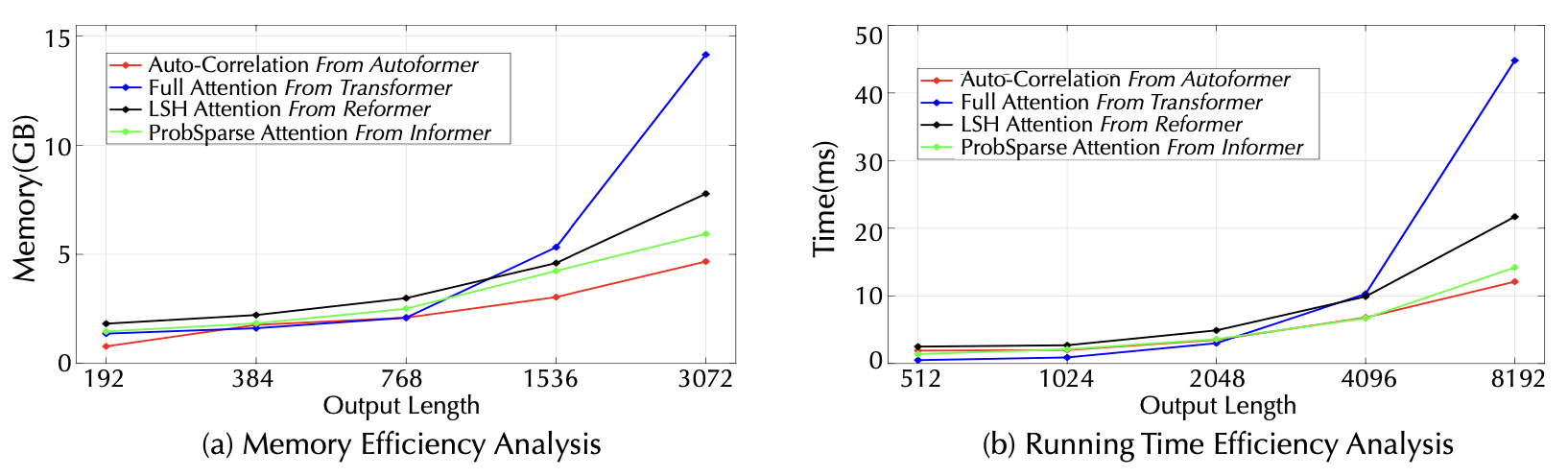

4. Efficiency analysis

Autoformer achieved better memory efficiency and time efficiency than the other models.

This shows that, in long-term forecasting where the output length O becomes very large, Autoformer is the stronger model in efficiency as well as in accuracy.

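Closing thoughts

This paper proposes Autoformer, a Transformer variant that builds the characteristics of time series into the original model.

Since the Transformer appeared, Transformer-based models equipped with self-attention have outperformed earlier deep learning models based on RNNs and CNNs.

Several Transformer variants modified the attention module or the architecture in order to raise long-term (long-range) forecasting performance "efficiently", and demonstrated good results.

Unlike those earlier variants, however, this paper proposes Autoformer as a model that "carries the characteristics of time series data" and is "more specialized for time series analysis".

What sets Autoformer apart is its use of the "Decomposition" and "Auto-Correlation" mechanisms.

As a result, the model shows the most robust performance among the compared models and achieves "SOTA" performance in long-term forecasting. It is an important paper that also points to the direction time series modeling in deep learning may take.

PS. I think this paper is very important because it showed a direction for time series analysis with Transformers. The decomposition architecture first presented here has gone on to form one axis of current research trends. Because the paper contains such good ideas and important content, I reviewed it in more detail than usual and the post grew long. Thank you to everyone who read to the end.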

Original paper

https://arxiv.org/abs/2106.13008

Autoformer: Decomposition Transformers with Auto-Correlation for Long-Term Series Forecasting
