2023. 3. 20. 16:00 · Ideas/(Advanced) Time-Series

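This post reviews a survey paper that organizes the research on applying Transformers to time series analysis.

Along with NLP, time series analysis is one of the representative sequential tasks, and it is applied across business domains such as finance, manufacturing, and marketing.

After the Transformer appeared in 2017 and went on to great success in NLP, researchers began applying it to time series analysis, which is also a sequential task. Because the Transformer was shown to handle long-range dependencies and to perform well on long sequences, it drew attention as a way to learn long time series effectively.

However, the vanilla Transformer has several limitations of its own, and it became clear that the model needed to be adapted to the characteristics of time series.

As a result, there has been active research on modifying the vanilla Transformer, from the attention module up to the overall architecture.

In that context, the paper consolidates the research carried out up to 2022 and suggests directions for future work.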

Introduction

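The Transformer has become a major focus of the deep learning community thanks to its strong performance in NLP, CV, and speech processing.

Transformers have proven particularly good at capturing long-range dependencies and interactions in sequential data, which makes them attractive for time series modeling as well.

Over the past few years, many Transformer variants have been proposed to address the challenges of time series analysis, and they have performed well on tasks such as forecasting, classification, and anomaly detection.

Even so, many studies note that capturing temporal dependencies effectively and building models that account for time series characteristics such as seasonality and trend remain open challenges.

As the Transformer continues to be adapted to time series by overcoming these limitations, the authors state that the purpose of the paper is to comprehensively summarize the ideas and results so far and to draw out implications for future research.

The rest of this post is organized as follows.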

1. Brief introduction about vanilla Transformer

2. Taxonomy of variants of TS Transformer

2-1 Network modifications

- Positional Encoding

- Attention Module

- Architecture

2-2 Application domains

- Forecasting

- Anomaly Detection

- Classification

3. Experimental Evaluation and Discussion

4. Future Research Opportunities

1. Brief introduction about vanilla Transformer (Preliminaries of the Transformer)

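Before summarizing how Transformers have been applied to time series analysis, the paper briefly explains the components of the vanilla Transformer.

The structural components the paper covers are the following.

1. Positional Encoding (injecting position information before attention)

2. Attention Module (the structure of the layers where attention happens, such as self-attention and multi-head attention)

3. Architecture (how the modules are connected)

A detailed explanation of the vanilla Transformer is available at the link below.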

Transformer architecture reference

https://seollane22.tistory.com/20


2. Taxonomy of Variants (Transformers in Time Series)

From this section on, the paper summarizes and organizes the research on time series Transformers.

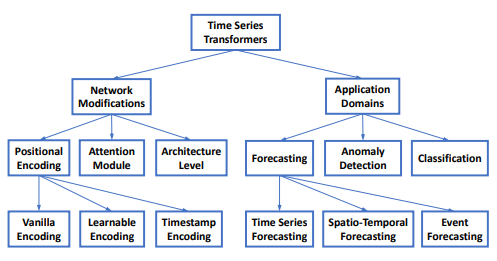

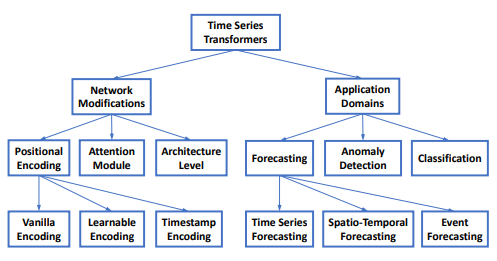

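The authors propose the taxonomy above to explain how Transformers for time series have been studied.

The paper follows this taxonomy as well: the research is first divided into modifications to the Transformer's network structure and applications to each domain.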

2-1. Network Modification

The first perspective in applying Transformers to time series analysis is to modify the network structure.

The goal is to adapt the basic structure of the Transformer to the various challenges of time series tasks.

2-1-1. Positional Encoding

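Unlike RNN-based models, the Transformer does not process its input sequentially, so the time order must be injected into the input for the model to learn temporal information.

This step is called positional encoding: the position information is encoded as a vector and added to the input.

In time series modeling, positional encodings fall into the following three categories, depending on how the position information is obtained.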

- Vanilla Positional Encoding

Vanilla positional encoding reuses the scheme of the vanilla Transformer unchanged.

It contains no relative information or time-event information; it simply encodes the order in which the inputs appear and adds that encoding to the input. It can extract some information from the ordering of the data, but it cannot fully exploit the important features of time series data. ("they were unable to fully exploit the important features of time series data")
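As a rough illustration of the vanilla scheme, the sketch below builds the fixed sinusoidal encoding from the original Transformer and adds it to an input. The sequence length of 96, the model width of 16, and the random input are assumptions chosen only for the example.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Fixed sinusoidal encoding of the vanilla Transformer (d_model assumed even)."""
    positions = np.arange(seq_len)[:, None]        # (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]       # even dimension indices 2i
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)                   # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)                   # odd dimensions use cosine
    return pe

# Assumed toy input: 96 time steps already projected to 16 dimensions.
x = np.random.default_rng(0).normal(size=(96, 16))
pe = sinusoidal_positional_encoding(96, 16)
x_with_position = x + pe
```

The encoding depends only on the index of each step, which is why it carries no calendar or event information.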

- Learnable Positional Encoding

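Vanilla positional encoding merely applies a fixed function to the position of each data point, and such a hand-crafted method has limited expressive power.

Several studies have accordingly found that a learnable positional embedding is more effective.

A learned embedding is more flexible than the vanilla scheme and can adapt to the task at hand, for example learning and predicting when a particular event occurs.

The paper introduces the following studies in this category.

[Zerveas et al., 2021] introduces an embedding layer that learns an embedding vector for each position index jointly with the other model parameters.

[Lim et al., 2021] uses an LSTM network to encode positions, which can better exploit the sequential ordering of time series data.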

- Timestamp Encoding

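When modeling real-world scenarios as time series, timestamp information is usually the easiest to access and extract.

Timestamps include calendar information (day, weekend, month, year, and so on) and special events (trading deadlines, sale periods), which mark periodic points in a time series.

Such timestamps are often very informative in real applications, but the vanilla Transformer makes almost no use of them.

Various variants have therefore tried to use them in the positional encoding step.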

Informer [Zhou et al., 2021]

Informer reworks the Transformer's modules and architecture across the board to suit time series analysis. The paper proposing it adds learnable embedding layers in the encoding step so that the model learns from these timestamps.

Autoformer [Wu et al., 2021] and FEDformer [Zhou et al., 2022] adopt a similar timestamp encoding.
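One way to picture timestamp encoding is as a set of lookup tables, one per calendar feature, whose rows are summed for each time step. The sketch below is only an illustration under that assumption: the table names, the choice of hour, weekday, and month, and the random initialization (standing in for trained parameters) are all hypothetical and not taken from any of the cited models.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 8

# Hypothetical embedding tables; in a real model these would be trainable parameters.
hour_table = rng.normal(scale=0.02, size=(24, d_model))
weekday_table = rng.normal(scale=0.02, size=(7, d_model))
month_table = rng.normal(scale=0.02, size=(12, d_model))

def timestamp_encoding(hours, weekdays, months):
    """Sum one embedding per calendar feature for every time step."""
    # months are 1-based (1..12), so shift to a 0-based row index.
    return hour_table[hours] + weekday_table[weekdays] + month_table[months - 1]

# Four consecutive hourly steps crossing midnight from Sunday (6) to Monday (0) in March.
hours = np.array([22, 23, 0, 1])
weekdays = np.array([6, 6, 0, 0])
months = np.array([3, 3, 3, 3])
stamp_enc = timestamp_encoding(hours, weekdays, months)
```

The result has one vector per time step and would be added to the input in the same way as a positional encoding.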

2-1-2. Attention Module

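The second item under network modification for time series Transformers is the attention module.

Beyond positional encoding, researchers have continued to modify the attention module to compensate for the limitations of the vanilla Transformer and to address the challenges of time series analysis.

The attention module (self-attention in particular) is the core of the Transformer: it scans the entire input and generates weights based on similarity. It has the same maximum path length as a fully connected neural network, which lets it capture long-range dependencies effectively.

This advantage comes at a large cost, however: the computational complexity is quadratic in the sequence length N.

This quadratic complexity, O(N^2) in both time and memory, becomes a computational bottleneck and hurts memory efficiency.

The problem grows with N, that is, as the time series gets longer, so many studies have worked on improving the efficiency of attention.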
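To make the quadratic cost concrete, here is a minimal single-head scaled dot-product self-attention in NumPy. The sizes (N = 128, d = 16) and the random queries, keys, and values are assumptions for the example; the point is only that the weight matrix has N × N entries.

```python
import numpy as np

def softmax(scores: np.ndarray, axis: int = -1) -> np.ndarray:
    shifted = scores - scores.max(axis=axis, keepdims=True)  # for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def full_self_attention(q, k, v):
    """Every query is compared with every key, so the score matrix is (N, N)."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)
    weights = softmax(scores)
    return weights @ v, weights

rng = np.random.default_rng(0)
N, d = 128, 16
q, k, v = (rng.normal(size=(N, d)) for _ in range(3))
out, weights = full_self_attention(q, k, v)
n_scores = weights.size  # N * N = 16384
```

Doubling the sequence length quadruples the number of scores, which is the bottleneck the variants below try to avoid.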

This line of work on making the attention module more efficient falls into two groups: introducing a sparsity bias and exploiting the low-rank property.

Introducing "Sparsity bias" into the attention mechanism

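Introducing a sparsity bias was proposed as the first way to reduce the computational complexity of the attention module.

Sparsity is commonly introduced to prevent overfitting or to keep computation from growing too large, and it has been brought into attention for the same reason: to reduce computational complexity.

That is, rather than fully connected attention, which computes the similarity for every pair of positions, only the positions selected by the sparsity bias are used in the computation.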

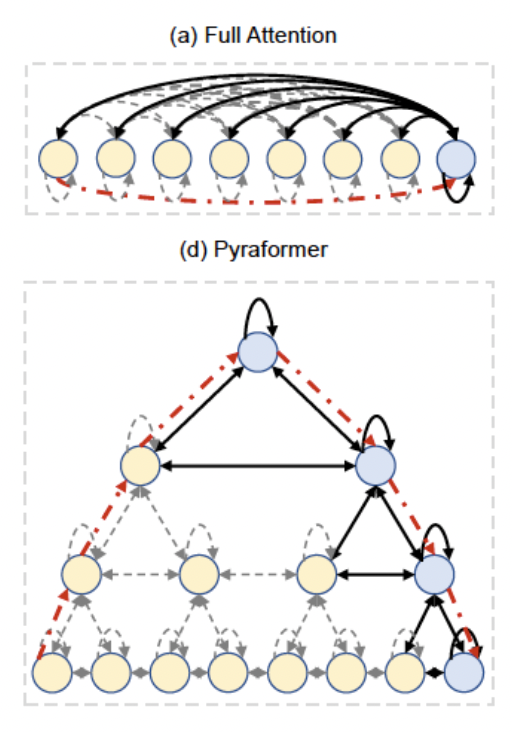

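The figure above illustrates this idea.

(a) is the self-attention performed by the vanilla Transformer. The other variants compute attention only at positions selected by the bias rather than over all of them, which reduces the computational complexity.

The following Transformer variants propose a sparsity bias.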

- LogTrans [Li et al., 2019]

- Pyraformer [Liu et al., 2022a]
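The sketch below shows one possible sparsity pattern: each position attends to itself and to earlier positions at exponentially growing distances (1, 2, 4, ...). It is meant only to illustrate the idea of a hand-designed sparse mask in the spirit of log-sparse attention; it is not a reproduction of the exact pattern used by LogTrans or Pyraformer, and the function name is hypothetical.

```python
import numpy as np

def log_sparse_mask(n: int) -> np.ndarray:
    """Boolean (n, n) mask: True where query i is allowed to attend to key j."""
    mask = np.zeros((n, n), dtype=bool)
    for i in range(n):
        mask[i, i] = True              # always attend to the current step
        step = 1
        while i - step >= 0:
            mask[i, i - step] = True   # past steps at distance 1, 2, 4, 8, ...
            step *= 2
    return mask

n = 256
mask = log_sparse_mask(n)
kept = int(mask.sum())   # number of query-key pairs actually computed
full = n * n             # number of pairs in full attention
```

Each row keeps on the order of log N entries, so the total grows roughly as N log N instead of N^2. In an attention layer the disallowed scores would be set to negative infinity before the softmax.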

Exploring the low-rank property of the self-attention

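The second way to make the attention module more efficient is to exploit the low-rank property of the self-attention matrix, so that the parts contributing little are left out of the computation.

The following Transformer variants propose this approach.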

- Informer [Zhou et al., 2021]

- FEDformer [Zhou et al., 2022]

Informer, the earlier of the two to propose and implement this idea, explains why such an approach is needed.

According to the authors of Informer, the first approach above, introducing a sparsity bias, is a heuristic that depends on human judgment.

They argue that a more principled way to compute selectively is to score importance with a formula and use only the entries with higher importance. Informer calls this ProbSparse attention.

As a result, this approach computes faster than the vanilla Transformer.
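The selection step can be sketched as follows, assuming the max-minus-mean score that the Informer paper uses as its query sparsity measurement. This is a simplified illustration, not the published implementation: it computes the full score matrix for clarity, so it shows the selection logic but not the actual savings, which in Informer come from estimating the measurement on a sample of keys. The sizes and the value of `u` are assumptions.

```python
import numpy as np

def softmax(scores: np.ndarray, axis: int = -1) -> np.ndarray:
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def prob_sparse_attention(q, k, v, u):
    """Full attention for the u most 'active' queries; the rest output mean(V)."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)                        # full matrix, for clarity only
    sparsity = scores.max(axis=1) - scores.mean(axis=1)  # max-minus-mean score per query
    top = np.argsort(sparsity)[-u:]                      # indices of the u highest-scoring queries
    out = np.tile(v.mean(axis=0), (q.shape[0], 1))       # fallback for unselected queries
    out[top] = softmax(scores[top]) @ v                  # ordinary attention for selected queries
    return out, top

rng = np.random.default_rng(0)
N, d = 32, 16
q, k, v = (rng.normal(size=(N, d)) for _ in range(3))
out, top = prob_sparse_attention(q, k, v, u=8)
```

A query whose scores are nearly uniform has a small max-minus-mean value and contributes little beyond the average of the values, which is why it can be skipped.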

At the end of this section, the paper compares the variants and their complexities.

The comparison shows that the variants with modified attention modules have lower complexity than the vanilla Transformer and its quadratic complexity.

2-1-3. Architecture-based Attention Innovation

The last item under network modification is changing the architecture.

Unlike the second approach, which modifies the attention module itself, this one changes the structure that connects the attention modules.

According to the paper, recent studies introduce a hierarchical architecture into Transformers for time series.

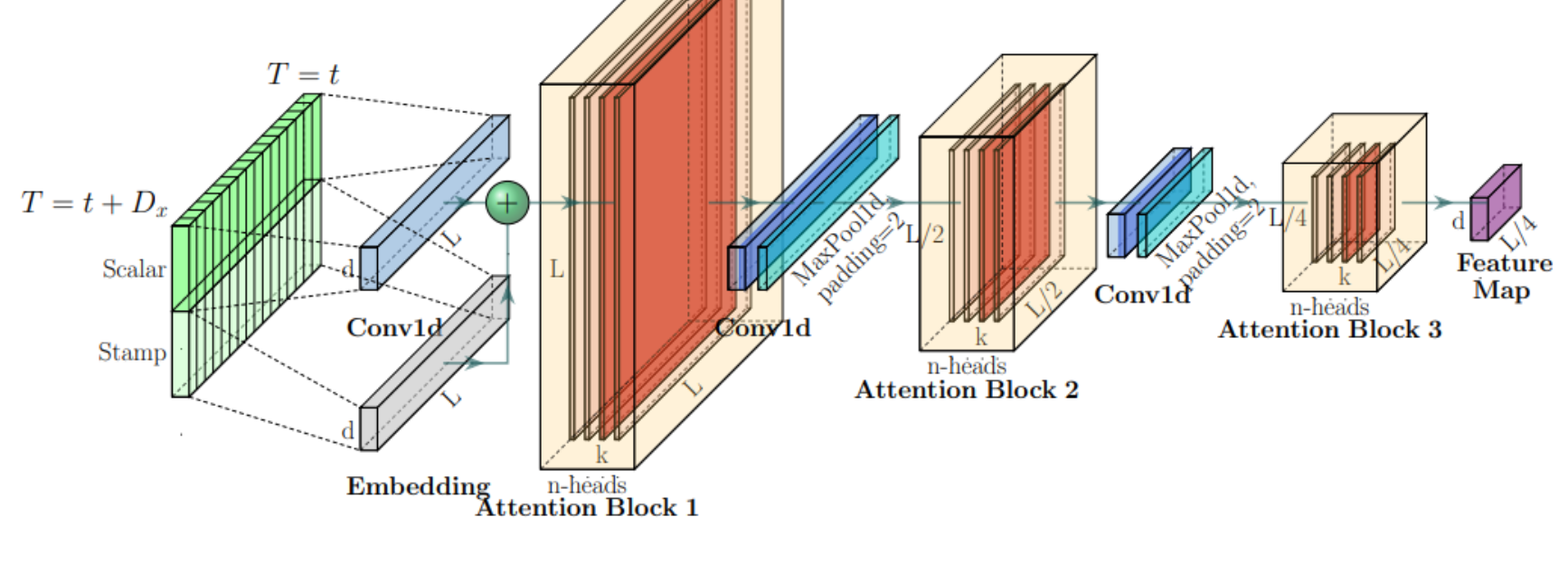

- Informer [Zhou et al., 2021]

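The Informer paper proposes an architecture that inserts max-pooling layers between attention modules.

The aim is to pass on only the important information, and because each pooling layer down-samples the series to half its length, it also reduces memory usage.

The Informer paper calls this operation self-attention distilling.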
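The halving effect can be seen with a plain max-pooling step along the time axis. The sketch below uses a window of 2 with stride 2 as a simplifying assumption; the Informer paper describes additional operations around the pooling (a convolution and an activation), which are omitted here.

```python
import numpy as np

def max_pool_stride2(x: np.ndarray) -> np.ndarray:
    """Max-pool along time with window 2 and stride 2: (N, d) -> (N // 2, d)."""
    n = x.shape[0] - x.shape[0] % 2    # drop a trailing step if N is odd
    return x[:n].reshape(n // 2, 2, x.shape[1]).max(axis=1)

# Assumed toy feature map: 8 time steps, 2 channels.
x = np.arange(16, dtype=float).reshape(8, 2)
pooled = max_pool_stride2(x)             # 4 time steps remain
pooled_twice = max_pool_stride2(pooled)  # 2 time steps remain
```

Stacking such layers between attention blocks means each later block works on a sequence half as long as the one before it.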

- Pyraformer [Liu et al., 2022a]

The Pyraformer paper designs a C-ary tree-based attention mechanism as a new architecture for the Transformer.

It is also called pyramidal attention, and it is characterized by building both intra-scale and inter-scale attention.

In other words, it attends both within a scale and across scales, which lets it capture temporal dependencies across different resolutions effectively while keeping the computation efficient.
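The multi-resolution structure behind a C-ary tree can be sketched by repeatedly grouping C neighboring nodes into one coarser node. In the sketch below the coarser nodes are plain averages of their children, which is an assumption made for simplicity and not how Pyraformer constructs them; the function names are hypothetical.

```python
import numpy as np

def build_pyramid(x: np.ndarray, c: int, n_scales: int) -> list:
    """Return [finest, ..., coarsest]; each coarser node averages c children."""
    scales = [x]
    for _ in range(n_scales - 1):
        prev = scales[-1]
        n = prev.shape[0] // c * c     # ignore leftover nodes that do not fill a group
        scales.append(prev[:n].reshape(n // c, c, prev.shape[1]).mean(axis=1))
    return scales

def parent_index(i: int, c: int) -> int:
    """Index of the node one scale coarser that covers node i (inter-scale link)."""
    return i // c

x = np.arange(64, dtype=float).reshape(64, 1)
scales = build_pyramid(x, c=4, n_scales=3)
lengths = [s.shape[0] for s in scales]   # [64, 16, 4]
```

Intra-scale attention would connect neighboring nodes within one entry of `scales`, while inter-scale attention would connect a node to its parent and children across entries.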

2-2. Application Domain

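Besides the network modifications discussed so far, the other major direction in time series Transformer research is the application domain.

As the right branch of the taxonomy figure above shows, the main time series tasks are forecasting, anomaly detection, and classification.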

2-2-1. Forecasting

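Forecasting is the most basic and most prominent area of time series analysis.

For forecasting, the main direction of Transformer research has been to build module-level and architecture-level variants.

This matches the directions discussed under network modification: modifying the attention module and the architecture.

Many variants have been proposed along these two directions to improve forecasting accuracy and make the computation more efficient.

The paper summarizes the following models.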

1-1) New Module Design

Introducing a sparsity inductive bias or a low-rank approximation

- LogTrans [Li et al., 2019]

- Informer [Zhou et al., 2021]

- AST [Wu et al., 2020a]

- Pyraformer [Liu et al., 2022a]

- Quatformer [Chen et al., 2022]

- FEDformer [Zhou et al., 2022]

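The goals of these models, and the reasons behind them, are the same as discussed under network modification above.

For long time series forecasting, they achieve better memory efficiency and faster computation through a sparsity inductive bias or a low-rank approximation.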

1-2) Modifying the normalization mechanism

Addresses the over-stationarization problem

- Non-stationary Transformer [Liu et al., 2022b]

1-3) Utilizing the bias for token input

Segmentation-based representation mechanism (making the most of the bias in the input tokens).

A simple seasonal-trend decomposition architecture with an auto-correlation mechanism (reflecting the characteristics of time series data)

- Autoformer [Wu et al., 2021]

Unlike the other time series Transformer variants, Autoformer builds traditional time series analysis methods into its structure.

It incorporates the concepts of auto-correlation, seasonality, and trend, which most time series data exhibit, into a very effective analysis mechanism.
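Auto-correlation itself can be computed efficiently with the FFT, using the fact that the autocorrelation of a signal is the inverse transform of its power spectrum. The sketch below computes a circular autocorrelation and reads off the dominant period of a toy series. It covers only this correlation step and is not Autoformer's full mechanism; the sine wave with period 24 is an assumption for the example.

```python
import numpy as np

def circular_autocorrelation(x: np.ndarray) -> np.ndarray:
    """Autocorrelation at every lag via the power spectrum (circular, unnormalized)."""
    x = x - x.mean()
    spectrum = np.fft.rfft(x)
    return np.fft.irfft(spectrum * np.conj(spectrum), n=len(x))

# Assumed toy series: four full cycles of a period-24 sine wave.
t = np.arange(96)
series = np.sin(2 * np.pi * t / 24)
acf = circular_autocorrelation(series)

# Search lags 1..47 for the strongest repetition; lag 0 is trivially the maximum.
best_lag = int(np.argmax(acf[1:48])) + 1   # 24
```

Lags with high correlation indicate the periods at which the series repeats, which is the kind of dependency a period-aware mechanism can exploit.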

Beyond this kind of numerical forecasting, Transformers are also widely studied for spatio-temporal forecasting and event forecasting.

2-2-2. Anomaly Detection

Transformers also perform well in time series anomaly detection.

- Models that combine a Transformer with a neural generative model

1. TranAD [Tuli et al., 2022]

A simple Transformer tends to miss small deviations caused by anomalies. To address this, TranAD uses adversarial training to amplify the reconstruction error during learning.

2. MT-RVAE [Wang et al., 2022]

3. TransAnomaly [Zhang et al., 2021]

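Both studies combine a Transformer with a VAE (Variational Autoencoder), but for different purposes.

TransAnomaly [Zhang et al., 2021] combines the two to allow more parallelization and reduce training cost, while MT-RVAE [Wang et al., 2022] designs a multiscale Transformer to extract and integrate time series information at different scales.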

4. GTA [Chen et al., 2021c]

This study combines a Transformer with a graph-based learning architecture.

2-2-3. Classification

Because Transformers are good at capturing long-range dependencies, they are also effective for time series classification.

GTN [Liu et al., 2021]

This study proposes a two-tower Transformer in which one tower performs time-step-wise attention and the other channel-wise attention. The features of the two towers are merged with a learnable weighted concatenation, also known as gating. The authors report state-of-the-art results on time series classification.
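A learnable weighted concatenation can be sketched as follows: a linear layer maps the concatenated tower outputs to two scores, a softmax turns them into two gates, and each tower's features are scaled by its gate before concatenation. This is a sketch of the general idea under assumed shapes and names (`step_feat`, `chan_feat`, `W`, `b` are hypothetical), with random weights standing in for trained ones.

```python
import numpy as np

def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    shifted = z - z.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def gated_concat(step_feat, chan_feat, W, b):
    """Scale each tower's features by a learned gate, then concatenate."""
    h = np.concatenate([step_feat, chan_feat], axis=-1) @ W + b   # (batch, 2) gate scores
    g = softmax(h, axis=-1)                                       # two gates summing to 1
    merged = np.concatenate([step_feat * g[:, :1], chan_feat * g[:, 1:]], axis=-1)
    return merged, g

rng = np.random.default_rng(0)
batch, d_step, d_chan = 4, 6, 3
step_feat = rng.normal(size=(batch, d_step))   # output of the time-step-wise tower
chan_feat = rng.normal(size=(batch, d_chan))   # output of the channel-wise tower
W = rng.normal(scale=0.1, size=(d_step + d_chan, 2))
b = np.zeros(2)
merged, gates = gated_concat(step_feat, chan_feat, W, b)
```

Because the gates are computed per sample, the model can lean on the time-step view for some inputs and on the channel view for others.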

[Rußwurm and Korner, 2020]

This study addresses raw optical satellite time series classification and proposes a self-attention-based Transformer for it.

[Yuan and Lin, 2020]

[Zerveas et al., 2021]

[Yang et al., 2021]

These studies bring pre-trained Transformers to time series classification.

3. Experimental Evaluation and Discussion

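To conclude their summary of time series Transformers, the authors run several tests comparing the variants.

The tests use ETTm2 [Zhou et al., 2021], a popular benchmark dataset for time series analysis.

Informer [Zhou et al., 2021] already showed that classical statistical models such as ARIMA and earlier deep learning models such as CNNs and RNNs perform worse than Transformers, so the paper focuses on the Transformer variants.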

3-1) Robustness Analysis

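The results in Table 2 can be summarized as follows.

- Overall, the variants, and Autoformer in particular, forecast better than the vanilla Transformer.

- For every model, performance tends to degrade as the input length grows, that is, as the series gets longer.

It is telling that Autoformer, which incorporates ideas from traditional time series analysis, performs best.

This suggests that research should keep combining the Transformer with traditional time series methods and with the characteristics of time series data.

Models that stay strong on long inputs are also still lacking, so ways to capture temporal dependencies over longer series need further study.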

3-2) Model Size Analysis

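One reason Transformers perform so well in NLP and CV is that the models can be scaled up to very large sizes.

Model size is usually controlled through the number of layers, which in NLP and CV typically falls between 12 and 128.

The results in Table 3 can be summarized as follows.

- Models with 3 to 6 layers perform best.

- Adding more layers does not improve performance.

The tests show that, unlike in NLP and CV, a larger model capacity does not guarantee better performance for time series Transformers.

Identifying the cause and designing architectures that can scale performance with deeper layers will therefore be an important research direction.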

3-3) Seasonal-Trend Decomposition Analysis

Recently, [Wu et al., 2021; Zhou et al., 2022; Lin et al., 2021; Liu et al., 2022a] have suggested that decomposing a time series into its components may be key to Transformer performance.

Table 4 compares the performance of each original model with that of the same model after applying the simple moving-average seasonal-trend decomposition architecture proposed in Autoformer [Wu et al., 2021].

The rightmost column, promotion, shows that applying decomposition improves performance over the original version by 50% to 80%. This suggests that decomposition is likely to be a key ingredient of time series Transformer performance.

The paper accordingly emphasizes that designing more advanced time series decomposition schemes will be very important in future research.
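A moving-average seasonal-trend decomposition can be sketched in a few lines: the trend is a moving average of the series, and the seasonal part is whatever remains. The window of 25 steps, the edge padding that repeats the first and last values, and the toy series are assumptions for the example, and the function name is hypothetical.

```python
import numpy as np

def series_decomp(x: np.ndarray, kernel_size: int = 25):
    """Split a 1-D series into (seasonal, trend) with a moving average (odd kernel_size)."""
    pad = (kernel_size - 1) // 2
    # Repeat the edge values so the trend has the same length as the input.
    padded = np.concatenate([np.repeat(x[:1], pad), x, np.repeat(x[-1:], pad)])
    kernel = np.ones(kernel_size) / kernel_size
    trend = np.convolve(padded, kernel, mode="valid")
    seasonal = x - trend
    return seasonal, trend

# Assumed toy series: a slow upward drift plus a period-24 cycle.
t = np.arange(192)
x = 5.0 + 0.1 * t + np.sin(2 * np.pi * t / 24)
seasonal, trend = series_decomp(x)
```

By construction the two parts add back to the original series, so the model can process the smooth trend and the oscillating remainder separately.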

4. Future Research Opportunities

Finally, based on its survey of time series Transformers, the paper draws out implications and suggests directions for future research.

1. Inductive Biases for Time Series Transformers

- Control noise and extract signal efficiently. (based on understanding Time-Series Data and tasks)

2. Transformers and GNN(Graph-neural-network) for Time Series

- Combine Transformers with GNNs to build efficient models for spatio-temporal data, not only for numerical forecasting.

3. Pre-trained Transformers for Time Series

- Build pre-trained models suited to each task and domain.

4. Transformers with Architecture Level Variants

- Most current research concentrates on modifying modules. The architecture of the Transformer itself needs to be designed for time series.

5. Transformers with NAS for Time Series (Neural architecture search (NAS) )

- The Transformer's hyperparameters should be optimized for time series analysis.

(embedding dimension, number of heads, and number of layers)

Closing thoughts

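This paper not only answered my initial, vague question of what would happen if Transformers were applied to time series analysis, but also broadened my view of the trends so far and the directions for future research.

I therefore expect that reviewing this paper first will help lay out the overall flow and direction before looking at each Transformer variant in detail.

The variants mentioned in this paper will be covered in detail in later paper reviews.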

Original paper

https://arxiv.org/abs/2202.07125

Transformers in Time Series: A Survey
