1. Introduction

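Large language models (LLMs) show remarkable capabilities, but they still have serious shortcomings. In particular, they do not know recent information that is missing from their training data, and in applied settings they cannot consult a company's internal information. This knowledge cut-off problem pushes an LLM toward hallucination, stating things that are not true as if they were facts. Another frequently noted weakness is that the reasoning behind a model's answer is hard to inspect.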

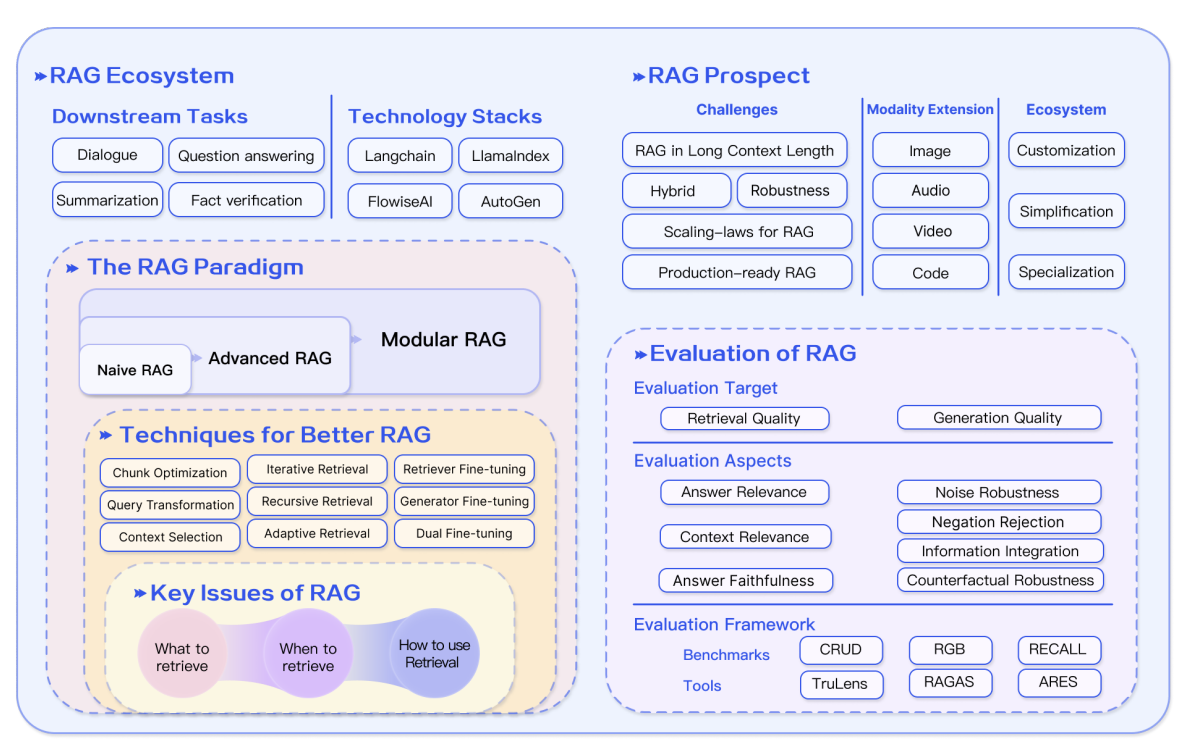

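RAG (Retrieval-Augmented Generation) emerged to address these problems. Instead of relying only on the parametric knowledge stored inside the LLM, RAG retrieves relevant knowledge from an external database and generates the answer based on it. This improves the accuracy and credibility of answers and makes continuous knowledge updates possible. The paper presents a technology tree that shows at a glance how RAG has developed.

As the figure above (Fig. 1) shows, the trajectory of RAG research has evolved through three broad stages.

- Pre-training: In the early days, alongside the rise of the Transformer architecture, research focused on injecting additional knowledge into pre-trained models (PTMs).

- Inference: After ChatGPT, research that supplies better information at inference time by exploiting the strong In-Context Learning (ICL) ability of LLMs grew explosively.

- Fine-tuning: More recently, work has moved beyond simply using retrieved information and combines the RAG process itself with LLM fine-tuning to maximize performance.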

2. Overview of RAG

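1. How RAG Works

A typical RAG pipeline retrieves relevant document chunks from an external database for the user's question (Retrieval), combines them with the question, and has the LLM generate the answer (Generation). This lets the LLM reflect recent information missing from its training data, reduce hallucination, and produce more trustworthy answers.

2. The Evolution of RAG Paradigms (RAG Paradigm)

A. Naive RAG (Retrieve-Read Framework)

Naive RAG is the earliest research paradigm, widely adopted shortly after ChatGPT appeared, and it follows the traditional "Retrieve-Read" framework.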

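- Process:

  - Indexing: Documents in formats such as PDF and HTML are extracted to text and split into chunks, then converted to vectors by an embedding model and stored in a vector database.

  - Retrieval: The user's question is vectorized, its similarity to the chunks in the database is computed, and the top K most relevant chunks are returned.

  - Generation: The retrieved chunks are combined with the question into a prompt, which is fed to the LLM to produce the final answer.

- Limitations: The paper points out the limitations of Naive RAG in three specific areas.

  - Retrieval Challenges: Precision and recall are low, so the retriever brings back irrelevant documents or misses important information.

  - Generation Difficulties: Hallucination persists, with the model answering with fabricated content that is not grounded in the retrieved information, and answers can also be biased or toxic.

  - Augmentation Hurdles: When retrieved passages conflict or overlap, the system fails to integrate them smoothly, producing answers that are disjointed or needlessly repetitive.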
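The Indexing, Retrieval, and Generation steps above can be sketched in a few lines. This is only a toy illustration, not the paper's implementation: `embed` is a bag-of-words stand-in for a real embedding model, the in-memory list stands in for a vector database, and all function names are hypothetical. The final LLM call is left out; the sketch stops at the assembled prompt.

```python
import math
from collections import Counter


def chunk(text: str, size: int = 8) -> list[str]:
    """Indexing, step 1: split text into fixed-size word chunks."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents: list[str]) -> list[tuple[str, Counter]]:
    """Indexing, step 2: store every chunk next to its vector."""
    return [(c, embed(c)) for doc in documents for c in chunk(doc)]


def retrieve(index: list[tuple[str, Counter]], question: str, k: int = 2) -> list[str]:
    """Retrieval: rank chunks by similarity to the question, keep the top k."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Generation input: combine retrieved chunks and the question."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


docs = [
    "the retriever finds relevant chunks in a vector database",
    "bananas are yellow and grow in warm tropical climates",
]
index = build_index(docs)
question = "what does the retriever find in the vector database"
print(build_prompt(question, retrieve(index, question, k=1)))
```

Swapping `embed` for a real embedding model and the list for a vector store keeps the same three-stage shape.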

B. Advanced RAG: Optimization for Quality

Advanced RAG addresses the retrieval-quality and generation-quality problems of Naive RAG by optimizing the steps before retrieval (pre-retrieval) and after retrieval (post-retrieval).

- Pre-retrieval Process:

  - Indexing Optimization: To raise data quality, it applies a sliding window or finer-grained segmentation and adds metadata to improve the index structure.

  - Query Optimization: Instead of using the user's question as is, it refines the question into a retrieval-friendly form through query rewriting, transformation, and expansion.

- Post-retrieval Process:

  - Rerank: The retrieved documents are reordered so that the most relevant information sits at the beginning or end (the edges) of the prompt. This counters the "Lost in the middle" effect, in which LLMs tend to overlook information placed in the middle of a long context.

  - Context Compression: Feeding in everything causes information overload, so unnecessary content is removed and only the compressed key information is passed to the LLM.
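The reranking idea above (most relevant documents at the edges of the prompt) can be illustrated with a small reordering helper. This is a sketch under the assumption that the input list is already sorted from most to least relevant; the function name is hypothetical and the alternating scheme is just one simple way to get the edge placement.

```python
def reorder_for_edges(docs_by_relevance: list[str]) -> list[str]:
    """Place the most relevant documents at the start and end of the context.

    The input is sorted from most to least relevant. Items are dealt
    alternately to the front and to the back, so the least relevant
    documents end up in the middle of the prompt.
    """
    front: list[str] = []
    back: list[str] = []
    for i, doc in enumerate(docs_by_relevance):
        (front if i % 2 == 0 else back).append(doc)
    return front + back[::-1]


# d1 is the most relevant document, d5 the least relevant.
print(reorder_for_edges(["d1", "d2", "d3", "d4", "d5"]))
# → ['d1', 'd3', 'd5', 'd4', 'd2']
```

The two strongest documents (`d1`, `d2`) land first and last, while the weakest (`d5`) sits in the middle.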

C. Modular RAG: Flexibility and Versatility

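Modular RAG breaks away from the linear structure of its predecessors. It is the most advanced form, in which functional modules can be assembled like Lego blocks.

- New Modules: Modules that perform specialized functions beyond simple retrieval were added.

  - Search Module: Adapts to a specific scenario (databases, knowledge graphs, and so on) and searches directly using search engines or code.

  - RAG-Fusion: Expands the user's query into multiple perspectives (multi-query), searches with each, and merges the results to secure diversity in the answer.

  - Memory Module: Uses the LLM's memory to guide retrieval and builds an unbounded memory pool whose knowledge is continually updated.

  - Routing: Chooses the best path for each type of question, for example whether summarization is needed or a specific database should be searched.

  - Predict: Has the LLM generate the context directly instead of retrieving it, which reduces redundancy and noise.

- New Patterns: The flow is flexible rather than a fixed order (Retrieve -> Read).

  - Rewrite-Retrieve-Read: The query is rewritten before retrieval.

  - Generate-Read: The LLM's generative ability is used first, in place of retrieval.

  - Hybrid Retrieval: Keyword search and semantic search are combined.

  - DSP (Demonstrate-Search-Predict): A framework that demonstrates examples, searches, and then predicts in order to strengthen In-Context Learning.

  - Iterative & Adaptive: Retrieval and reading are repeated, as in ITER-RETGEN, or the model decides for itself when retrieval is needed, as in Self-RAG, to maximize efficiency.

What makes this interesting is that early RAG was a straight-line pipeline, whereas Modular RAG has turned into an ecosystem that can be assembled like Lego blocks. It suggests that RAG is evolving from a single technique into a full architecture.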

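3. RAG vs Fine-tuning

"Should we use RAG or fine-tune?" is a common dilemma in practice. An analogy helps.

- RAG: Like handing the model a tailored textbook and letting it look things up, which suits precise information retrieval. It also has a large efficiency advantage, because it avoids most of the compute, data, and time that parameter tuning requires.

- Fine-tuning: Like a student internalizing knowledge before an exam, which suits learning a specific format or style. It trades away some of the general-purpose, broadly applicable knowledge that is the strength of a base LLM in exchange for more specialized performance on a particular task.

In conclusion, the two techniques are complementary rather than mutually exclusive, and using them together is worth considering when aiming for the best performance.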

3. Retrieval

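RAG performance ultimately depends on how accurately relevant documents are retrieved. This section covers the attempts to improve retrieval quality: expanding retrieval sources, indexing, query optimization, and tuning the embedding model, all aimed at finding exactly the information that is needed.

1. Retrieval Source: The form and granularity of the data being searched strongly affect RAG performance.

- Expanding data structures: Early on, unstructured text such as Wikipedia was the mainstream, but the range has steadily widened.

  - Semi-structured data: Data that mixes text and tables, such as PDFs, suffers from tables being broken apart during text splitting. Approaches to this include converting tables to text or using the LLM's coding ability to handle them through Text-to-SQL.

  - Structured data: Knowledge graphs (KGs) are very useful because they provide verified information. Work such as KnowledGPT and G-Retriever extracts precise factual relations from a KG to reduce LLM hallucination.

  - LLMs-Generated Content: Turning the idea around, some work uses knowledge generated by the LLM itself, rather than external data, as the retrieval source. GenRead replaces the retriever with an LLM generator to produce the context, and Selfmem stores generated answers back into a memory pool to reinforce itself.

- Retrieval Granularity: Deciding how large the retrieval units should be is very important. Units range from tokens, phrases, and sentences to whole documents, but the "proposition" has recently drawn attention. DenseX, which proposed proposition-level chunks, splits text into propositions, the smallest units that each carry a distinct fact, and thereby improves retrieval precision.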

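2. Indexing Optimization: The indexing stage, which stores documents in a searchable form, is the groundwork that determines retrieval quality.

- Chunking Strategy: Simply cutting documents at a fixed size, such as 100 or 500 characters, risks breaking the context. To compensate, strategies such as a sliding window or an embedding-based Semantic Chunker are used. Small2Big, meanwhile, retrieves at the level of small sentences (Small) but gives the LLM the larger context (Big) that contains the sentence, capturing both precision and contextual understanding. In other words, it separates the data used for retrieval from the data used for reasoning.

- Metadata Attachments: Chunks are tagged with file name, author, timestamp, and similar fields, which are then used for filtering. A particularly interesting technique is Reverse HyDE: based on a document's content, an LLM generates hypothetical questions that the document could answer, and these are stored as metadata. When a user later asks a question, it is matched against these hypothetical questions, raising the chance that the document is retrieved.

- Structural Index: Documents are stored in a parent-child hierarchy, or a knowledge graph (KG) is used to preserve the links between documents. This helps the LLM understand the structural context of information rather than isolated fragments.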
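The sliding-window and Small2Big ideas from the chunking strategy above can be sketched as follows. This is a minimal illustration under simple assumptions: the text is already split into words or sentences, and the function names, window sizes, and overlap values are hypothetical choices, not values from the paper.

```python
def sliding_window_chunks(words: list[str], size: int, overlap: int) -> list[list[str]]:
    """Cut a word list into chunks of `size` words that share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    chunks: list[list[str]] = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + size])
        if start + size >= len(words):  # the last window already reached the end
            break
    return chunks


def small_to_big(sentences: list[str], hit_index: int, window: int = 1) -> str:
    """Small2Big: search on one sentence, but return it with its neighbors."""
    lo = max(0, hit_index - window)
    hi = min(len(sentences), hit_index + window + 1)
    return " ".join(sentences[lo:hi])


words = "w0 w1 w2 w3 w4 w5 w6 w7 w8 w9".split()
for c in sliding_window_chunks(words, size=4, overlap=2):
    print(c)

sentences = ["A.", "B.", "C.", "D."]
# Suppose retrieval matched sentence index 2 ("C."); the LLM sees its neighbors too.
print(small_to_big(sentences, hit_index=2))
```

The overlap keeps a sentence that straddles a boundary intact in at least one chunk, at the cost of storing some text twice.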

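3. Query Optimization: The biggest mistake of Naive RAG is using the user's imperfect question for retrieval as is.

- Query Expansion: Used when a single question lacks detailed context and related information.

  - Multi-Query: An LLM expands one question into several questions from different perspectives, and these are searched in parallel.

  - Sub-Query: A complex question is broken down, through Least-to-Most prompting, into smaller sub-questions that can each be answered, and these are searched step by step.

  - Chain-of-Verification (CoVe): The expanded queries are verified again to reduce hallucination.

- Query Transformation: Gets at the essence of the question.

  - Query Rewrite: The LLM is asked to "rewrite this so it is easier to search." RRR and BEQUE fall into this category.

  - HyDE (Hypothetical Document Embeddings): The LLM first writes a hypothetical answer to the question, and real documents similar to that hypothetical answer are then retrieved. The clever idea is that an answer tends to lie closer to the relevant documents in embedding space than the question does.

  - Step-back Prompting: A specific question is turned into a more abstract, higher-level question so that broader background knowledge is retrieved.

- Query Routing: Acts as the traffic controller, sending each question to a different data source or pipeline depending on its nature.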
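Two of the query-optimization techniques above, Multi-Query and HyDE, share a simple control flow that can be sketched independently of any particular model. In the sketch below, `rewrite`, `generate`, and `search` are hypothetical callables that a real system would back with an LLM and a retriever; the fakes at the bottom exist only to make the example runnable.

```python
from typing import Callable


def multi_query_retrieve(
    question: str,
    rewrite: Callable[[str], list[str]],
    search: Callable[[str, int], list[str]],
    k: int = 3,
) -> list[str]:
    """Multi-Query: search with the original and rewritten queries, merge without duplicates."""
    seen: set[str] = set()
    merged: list[str] = []
    for query in [question, *rewrite(question)]:
        for doc in search(query, k):
            if doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged


def hyde_retrieve(
    question: str,
    generate: Callable[[str], str],
    search: Callable[[str, int], list[str]],
    k: int = 3,
) -> list[str]:
    """HyDE: search with a hypothetical answer instead of the question itself."""
    hypothetical_answer = generate(f"Write a short passage that answers: {question}")
    return search(hypothetical_answer, k)


# Fake components, for illustration only.
corpus = {
    "rag": ["doc_a", "doc_b"],
    "rag definition": ["doc_b", "doc_c"],
    "rag examples": ["doc_d"],
}


def fake_search(query: str, k: int) -> list[str]:
    return corpus.get(query, [])[:k]


def fake_rewrite(question: str) -> list[str]:
    return [f"{question} definition", f"{question} examples"]


print(multi_query_retrieve("rag", fake_rewrite, fake_search))
print(hyde_retrieve("what is rag", lambda prompt: "rag definition", fake_search, k=1))
```

Passing the components in as callables keeps the sketch neutral about which LLM or vector store is used.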

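4. Embedding: In the end, retrieval comes down to similarity between vectors.

- Hybrid Retrieval: Sparse models (for example BM25), which are strong at keyword matching, are mixed with dense models (for example BERT), which capture semantic context. Sparse models are good at finding rare words and technical terms that were absent from the training data, which covers a weakness of dense models.

- Fine-tuning Embedding: In specialized domains such as medicine and law, a general-purpose embedding model is likely to lose a great deal of performance. Work such as PROMPTAGATOR and LLM-Embedder uses an LLM to generate or label training data, making it possible to build a domain-specific embedding model from little data. REPLUG in particular proposed training the retriever with the LLM acting as a supervisor.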
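Hybrid retrieval needs some rule for merging the sparse and dense result lists. The text above does not say which rule to use, so the sketch below swaps in Reciprocal Rank Fusion (RRF), one widely used option that works on ranks alone and therefore does not need the two scoring scales to be comparable. The constant `k = 60` is a conventional default, and the document IDs are made up for illustration.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists: each document scores sum(1 / (k + rank))."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


sparse_ranking = ["a", "b", "c"]  # e.g. from a BM25 keyword search
dense_ranking = ["b", "d", "a"]   # e.g. from an embedding similarity search
print(reciprocal_rank_fusion([sparse_ranking, dense_ranking]))
# → ['b', 'a', 'd', 'c']
```

Documents that both retrievers rank highly (`b`, `a`) rise to the top, while documents found by only one retriever still survive in the fused list.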

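5. Adapter: Finally, this is the option when fine-tuning the whole model is difficult. As with LoRA fine-tuning, an external adapter is attached to help align the LLM with the retriever. UPRISE proposes a lightweight retriever that automatically fetches prompts suited to zero-shot tasks, while PKG goes further and skips the retrieval step altogether, directly generating the documents that match the query.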

4. Generation

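Retrieving the right documents is not the end of the job. Processing the information so that it is easy for the LLM to reason over leads to more accurate generation that better matches the intent.

1. Context Curation: Feeding in every retrieved document as is does not help. Redundant information muddies the LLM's reasoning, and an overly long context makes it miss the key information.

- Reranking: Like humans, LLMs focus on the beginning and end of a long text and tend to forget the middle, the "Lost in the middle" effect. The retrieved documents therefore need to be reordered so that the most important information sits at the beginning or end (the edges) of the prompt. Simple rules (diversity, relevance, and so on) can be used, but recent systems reorder precisely with models from the BERT family or specialized rerankers such as Cohere rerank and bge-reranker.

- Context Selection/Compression: More relevant documents is probably not always better, because excessive context introduces noise.

  - LLMLingua: Uses a small language model (sLLM) such as GPT-2 Small or LLaMA-7B to remove unnecessary tokens and compress the prompt to a form that is hard for humans to read but still understandable to an LLM. It shortens prompts dramatically without any additional training of the LLM.

  - Filter-Reranker: Builds a pipeline in which an sLLM acts as a filter that screens out the easy documents and an LLM acts as a reranker that reorders the hard ones, improving efficiency.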

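2. LLM Fine-tuning: A strategy that tunes the generator itself to maximize the performance of the RAG system.

- Domain and format optimization: Useful when data for a specific domain is scarce or when a special format (for example JSON output or a particular tone) must be followed.

  - SANTA: Effective when dealing with structured data. It goes through a three-stage training process to capture the structural and semantic nuances between the retriever and the generator.

- Alignment: The process of matching the LLM's output to human preferences or to the characteristics of the retriever.

  - RLHF (Reinforcement Learning): Reinforcement learning is performed using human evaluations of the generated answers or scores for their relevance to the retrieved documents.

  - Distillation: The output of GPT-4 or a stronger model is used as training data to tune a smaller model, improving cost efficiency.

- Collaborative Fine-tuning: Rather than letting the retriever and the generator work separately, the two are tuned together so that they work in step.

  - RA-DIT: Aligns the scoring functions of the retriever and the generator using Kullback-Leibler (KL) divergence. In other words, the two are trained with mutual feedback so that the retriever gets better at finding the documents the generator prefers.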
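The KL-based alignment above can be written down roughly as follows. This is a sketch of one common formulation (LM-supervised retrieval, in the spirit of REPLUG and RA-DIT), not a reproduction of the paper's notation: the retriever score $s(x, c)$, the retrieved candidate set $C'$, the temperature $\tau$, and the symbol $q_G$ for the generator-induced distribution are all names assumed here for illustration.

```latex
\begin{aligned}
p_R(c \mid x) &= \frac{\exp s(x, c)}{\sum_{c' \in C'} \exp s(x, c')} \\
q_G(c \mid x, y) &= \frac{\exp\big(p_{\mathrm{LM}}(y \mid c, x) / \tau\big)}{\sum_{c' \in C'} \exp\big(p_{\mathrm{LM}}(y \mid c', x) / \tau\big)} \\
\mathcal{L}(x, y) &= \mathrm{KL}\big(p_R(\cdot \mid x) \,\|\, q_G(\cdot \mid x, y)\big)
= \sum_{c \in C'} p_R(c \mid x) \log \frac{p_R(c \mid x)}{q_G(c \mid x, y)}
\end{aligned}
```

Here $p_R$ is how the retriever ranks chunk $c$ for query $x$, and $q_G$ reflects how much each chunk raises the generator's probability of the correct answer $y$. Minimizing $\mathcal{L}$ with respect to the retriever's parameters pushes the retriever toward the chunks the generator finds useful.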

5. Augmentation Process in RAG

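Standard RAG goes through a simple "retrieve once -> generate once" sequence. For problems that require complex reasoning or multi-step knowledge, however, this is often insufficient. Recent work therefore optimizes the retrieval process along three lines, iterative, recursive, and adaptive, and this has drawn considerable attention.

A. Iterative Retrieval

In iterative retrieval, the knowledge base is searched multiple times while the LLM generates the answer, enriching the context.

- How it works: An initial query is searched, then the search is repeated based on the results and the text generated so far. Repeating this process progressively refines the knowledge.

- Advantage: It supplies additional context that a single search could miss, which makes the answer more robust.

- Representative work: ITER-RETGEN aims for a synergy in which "retrieval helps generation and generation in turn helps retrieval." The generated content becomes the context for the next search, creating a virtuous cycle that brings back more relevant information.

- Limitation: The iterations can suffer from semantic discontinuity, and irrelevant information can accumulate.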
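The iterative loop described above (search, generate, then search again using what has been generated) can be sketched as below. It is a simplified illustration and not ITER-RETGEN itself: `search` and `generate` are hypothetical callables, the number of rounds is fixed in advance, and the fake knowledge base exists only to make the example runnable.

```python
from typing import Callable


def iterative_rag(
    question: str,
    search: Callable[[str, int], list[str]],
    generate: Callable[[str, list[str]], str],
    rounds: int = 2,
    k: int = 2,
) -> tuple[str, list[str]]:
    """Alternate retrieval and generation, feeding each draft into the next query."""
    context: list[str] = []
    draft = ""
    for _ in range(rounds):
        # The query combines the question with the text generated so far.
        query = f"{question} {draft}".strip()
        for doc in search(query, k):
            if doc not in context:  # avoid accumulating duplicates
                context.append(doc)
        draft = generate(question, context)
    return draft, context


# Fake components, for illustration only.
knowledge_base = {
    "capital": "Paris is the capital of France",
    "Paris": "Paris hosted the 2024 Olympics",
}


def fake_search(query: str, k: int) -> list[str]:
    return [text for key, text in knowledge_base.items() if key in query][:k]


def fake_generate(question: str, context: list[str]) -> str:
    return " ".join(context)


answer, used_context = iterative_rag("capital of France", fake_search, fake_generate)
print(used_context)
```

In the first round only the "capital" entry matches; the draft then mentions Paris, so the second round also pulls in the second entry, which a single search would have missed.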

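B. Recursive Retrieval

Recursive retrieval makes the query progressively more specific based on the search results, or digs into a complex problem by breaking it into smaller pieces.

- Query refinement: When the initial search results are unsatisfactory, they are used as feedback to revise the search query and search again. This resembles a user changing search terms in a search engine until the desired information appears.

- Representative work:

  - IRCoT (Information Retrieval with Chain-of-Thought): Uses a chain-of-thought (CoT) reasoning process to guide retrieval, and then refines the CoT with the retrieved results.

  - ToC (Tree of Clarifications): When an ambiguous question comes in, it builds a clarification tree to pin down and optimize the intent of the question.

- Structural use: This category also includes using a hierarchical index to first retrieve a summary of the document and then drill down into specific sections. Multi-hop retrieval that follows one link after another in a knowledge graph is likewise a kind of recursive retrieval.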

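C. Adaptive Retrieval

Adaptive retrieval is the most intelligent approach: the RAG system decides for itself when to retrieve, or whether retrieval is needed at all. It raises efficiency by cutting unnecessary searches and skips retrieval when the LLM's own knowledge is sufficient.

- Agentic approach: The LLM behaves like an agent that uses tools. As with AutoGPT and Toolformer, the model calls the search API only when it judges this to be necessary.

- Representative work:

  - WebGPT: Used reinforcement learning to train GPT-3 to use a search engine on its own, browse the results, and cite references.

  - FLARE: Monitors the model's confidence during generation. If the probability of the words it is about to generate is low (that is, the model is not confident), the retrieval system is triggered to fetch information.

  - Self-RAG: One of the most closely watched models, it introduced "reflection tokens." While generating text, the model emits tokens such as "Retrieve" and "Critic" on its own to decide whether retrieval is needed and to self-check the quality of the generated answer. The design matters because it removes the need for additional classifiers or natural language inference (NLI) models, which simplifies the decision of when to trigger retrieval and strengthens the model's autonomous judgment in producing accurate responses.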
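The confidence-triggered retrieval described for FLARE can be sketched as a small decision rule. This is a simplified illustration, not the FLARE implementation: `draft_sentence` is a hypothetical callable that returns a tentative sentence together with per-token probabilities, `search` is a hypothetical retriever, and the threshold of 0.4 is an arbitrary value chosen for the example.

```python
from typing import Callable


def needs_retrieval(token_probs: list[float], threshold: float = 0.4) -> bool:
    """Trigger retrieval if any token in the draft falls below the threshold."""
    return any(p < threshold for p in token_probs)


def adaptive_generate(
    question: str,
    draft_sentence: Callable[[str, list[str]], tuple[str, list[float]]],
    search: Callable[[str], list[str]],
    threshold: float = 0.4,
) -> tuple[str, bool]:
    """Generate without retrieval first; retrieve and regenerate only when unsure."""
    sentence, probs = draft_sentence(question, [])
    if not needs_retrieval(probs, threshold):
        return sentence, False           # the model was confident: skip retrieval
    docs = search(sentence)              # the tentative sentence serves as the query
    sentence, _ = draft_sentence(question, docs)
    return sentence, True


# Fake components, for illustration only.
def fake_draft(question: str, docs: list[str]) -> tuple[str, list[float]]:
    if docs:
        return "Paris is the capital.", [0.9, 0.9]
    return "Lyon is the capital.", [0.9, 0.2]  # low-confidence token


def fake_search(query: str) -> list[str]:
    return ["Paris is the capital of France"]


print(adaptive_generate("What is the capital of France?", fake_draft, fake_search))
```

The same gate is what saves cost: when every token clears the threshold, no search call is made at all.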

6. Task and Evaluation

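As RAG matures, the range of tasks it is applied to is widening, and the methods for measuring its performance are becoming more sophisticated.

A. Downstream Task

The core application of RAG is still question answering (QA), but QA itself has become far more complex and varied.

- Deeper QA: Beyond traditional single-hop questions, QA has branched into multi-hop QA, which requires reasoning over information combined from several documents, domain-specific QA, which requires knowledge of a particular field, and long-form QA, which requires generating extended answers.

- Wider scope: RAG is now expanding beyond QA into a variety of natural language processing tasks, including summarization, information extraction (IE), dialogue generation, and code search.

As the paper's table of downstream tasks shows, RAG is used in far more areas than one might expect, including commonsense reasoning, fact verification, and machine translation. There are too many to cover here, so please refer to the original paper for the details.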

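B. Evaluation Target

In the past, evaluation relied on traditional metrics such as exact match (EM) and F1 score. But because RAG is a composite system that couples retrieval with generation, evaluating these two axes separately has become the standard.

- Retrieval Quality: How well did the retrieval module find useful documents? Metrics from recommender systems, such as Hit Rate, MRR, and NDCG, are used to measure whether the correct documents appear near the top of the ranking.

- Generation Quality: Did the generation module answer in a way that reflects the context? For unlabeled content, the faithfulness and relevance of the answer are examined; for labeled content, accuracy is measured.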
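The three retrieval metrics named above can be computed for a single query as in the sketch below. It assumes binary relevance (a document is either relevant or not) and uses hypothetical function names; in practice the values are averaged over many queries, which turns the reciprocal rank into MRR.

```python
import math


def hit_rate(ranked: list[str], relevant: set[str], k: int) -> float:
    """1.0 if at least one relevant document appears in the top k, else 0.0."""
    return 1.0 if any(doc in relevant for doc in ranked[:k]) else 0.0


def reciprocal_rank(ranked: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant document (0.0 if none is found)."""
    for rank, doc in enumerate(ranked, start=1):
        if doc in relevant:
            return 1.0 / rank
    return 0.0


def ndcg(ranked: list[str], relevant: set[str], k: int) -> float:
    """Binary-relevance NDCG@k: discounted gain divided by the ideal ordering's gain."""
    dcg = sum(
        1.0 / math.log2(rank + 1)
        for rank, doc in enumerate(ranked[:k], start=1)
        if doc in relevant
    )
    ideal_hits = min(len(relevant), k)
    ideal = sum(1.0 / math.log2(rank + 1) for rank in range(1, ideal_hits + 1))
    return dcg / ideal if ideal else 0.0


ranked = ["d3", "d1", "d7"]   # retriever output, best first
relevant = {"d1"}             # ground-truth relevant documents
print(hit_rate(ranked, relevant, k=3))       # → 1.0
print(reciprocal_rank(ranked, relevant))     # → 0.5
print(round(ndcg(ranked, relevant, k=3), 4)) # → 0.6309
```

Hit Rate only asks whether the answer was found, while the reciprocal rank and NDCG also reward placing it higher in the list.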

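C. Evaluation Aspects

The paper proposes concrete criteria for evaluating RAG: three quality scores and four required abilities.

- Three Quality Scores:

  - Context Relevance: Are the retrieved documents truly relevant to the question? Unnecessary information raises cost and confuses the LLM, so precise retrieval is essential.

  - Answer Faithfulness: Is the generated answer firmly grounded in the retrieved context? This is the key metric for catching hallucination, RAG's greatest enemy, and checks that the answer does not contradict the context.

  - Answer Relevance: Does the answer address the intent of the user's question? It evaluates whether the answer gets to the point instead of wandering off topic.

- Four Required Abilities:

  - Noise Robustness: Does the system stay steady even when "noise documents," which are related to the question but do not contain the answer, are mixed in?

  - Negative Rejection: When the retrieved documents do not contain the answer, can the system decline with something like "There is not enough information to answer" instead of making something up? This bears directly on the system's trustworthiness.

  - Information Integration: Can the system combine fragments of information scattered across several documents to answer a complex question?

  - Counterfactual Robustness: When the documents contain known inaccuracies, can the system identify and ignore them?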

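D. Benchmarks and Tools

Having people score all of these metrics by hand is impractical at scale. Automated evaluation frameworks that use an LLM as the judge have therefore appeared.

- Benchmarks: RGB, RECALL, and CRUD are datasets designed to measure the required abilities of RAG (robustness, information integration, and so on).

- Automated tools: Tools such as RAGAS, ARES, and TruLens compute quality scores such as context relevance and answer faithfulness quantitatively. They act as a compass when improving a RAG pipeline.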

7. Discussion and Future Prospects

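The paper raises several interesting questions about the future of RAG and suggests directions for further research.

1. RAG vs Long Context

As LLM context windows have expanded rapidly to more than 200,000 tokens (and to 1,000,000 as of 2025), the question arises: "If an LLM can read an entire book in one go, is RAG still needed?" The paper argues that RAG still plays an irreplaceable role.

- Efficiency: Processing a long context all at once slows down inference. RAG, by contrast, fetches only the needed information in chunks, so it is much more efficient.

- Transparency: An answer generated from a long context comes out of a black box, whereas RAG explicitly presents the documents it referenced, so the user can verify the answer.

2. RAG Robustness

RAG quality still degrades when the retrieved information contains noise or incorrect information. The authors warn that "Misinformation can be worse than no information at all." Interestingly, one study found that including irrelevant documents actually increased accuracy by more than 30%. This suggests that the seemingly irrelevant information we call noise is not always harmful and can, in some cases, diversify the model's reasoning. More research is therefore needed on how to integrate retrieved information with the generation model.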

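3. Hybrid Approaches

Combining RAG with fine-tuning is becoming the mainstream.

- An open research question is how best to combine them: sequentially, in alternation, or through end-to-end joint training.

- There is also a trend toward integrating small specialized models (and sLLMs) into the RAG system, as in CRAG, which introduces a lightweight evaluator to judge retrieval quality.

4. Scaling Laws of RAG

Since GPT-3, the "scaling law" that performance improves as the model grows, that is, as the parameter count increases, has been established for LLMs, but whether it applies to RAG is still unknown.

- The possibility of an "Inverse Scaling Law," under which smaller models can outperform some larger ones, has also been raised, and this calls for in-depth investigation.

5. Production-Ready RAG & Ecosystem

These are the requirements for RAG to move out of the lab and into production services.

- Engineering challenges: Improving document recall over large knowledge bases, speeding up retrieval, and ensuring data security (keeping the LLM from inadvertently leaking sensitive information) remain to be solved.

- Ecosystem: Tools such as LangChain and LlamaIndex have become the standard technology stack for RAG development, and the ecosystem is branching out into low-code platforms such as Flowise AI and personal assistant applications such as Weaviate's Verba.

- Direction of development: The technology stack is evolving in three directions: customization, simplification, and specialization.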

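6. Multi-modal RAG

Finally, RAG is expanding beyond text into other modalities.

- Image: Models such as RA-CM3 and BLIP-2 retrieve and generate text and images together.

- Audio/Video: RAG is used to consult external knowledge when converting speech to text (UEOP) and to predict and describe the timeline of a video.

- Code: Models such as RBPS retrieve code examples that match the developer's intent to assist programming. CoK (Chain of Knowledge), which handles structured knowledge, extracts facts from a knowledge graph to support code generation and reasoning.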

8. Conclusion

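The paper shows that RAG is evolving beyond simple "retrieve and paste" into a large cognitive architecture that compensates for the limits of LLMs and actively uses external knowledge.

Where early RAG focused on retrieving documents well, the field is now moving toward finely coordinating retrieval and generation within a modular structure. However large the context window of LLMs becomes, the value of RAG in efficiently consulting vast external knowledge will not disappear, just as a person cannot memorize every book and carry it around. I expect RAG to combine with more modalities and become a core engine that raises the practical problem-solving ability of AI.

The paper offers a guide to RAG, a core technology in practice, from how it has evolved to where it is heading.

I strongly recommend reading the paper itself and following its references to look into the latest methods as well.

Personally, I read this paper about two years ago, when I was starting out as a researcher with a master's degree, and it helped me grasp the overall picture.

Reading it again now, after doing research on RAG and building applied systems in projects with companies, gives me a fresh perspective.

If I may add one thing: I think what ultimately matters in RAG is designing a method that fits the problem you want to solve and the resources (data and so on) you have. An advantage of RAG is that you can attach and detach components and assemble them to fit your own design. That leaves room for many kinds of experiments and applications, so I encourage you to try plenty of them and work toward the design that best fits your problem. (SOTA is not always the best choice.)

Best wishes to all students, researchers, and practitioners!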

https://arxiv.org/abs/2312.10997

Retrieval-Augmented Generation for Large Language Models: A Survey
